- YouTube Videos to MP3 and Transcription by JeremyWhittaker

I often listen to YouTube videos that I would like to dump into an MP3 and take on the road. This script does exactly that. I also added transcription for analysis. Just put in your link and it will generate the MP3 and TXT files.

- Analyzing Any Polymarket User’s Trades Using Polygon by JeremyWhittaker

Polymarket.com, a prediction market platform, runs on Polygon, an Ethereum-compatible network, which makes it possible to analyze user transactions directly from the blockchain. Given a user’s wallet address, we can examine their trades in detail, track profit and loss, and monitor position changes over time. In this post, I’ll show how to use Polygon blockchain data to analyze Polymarket trades with a wallet address and some Python code.

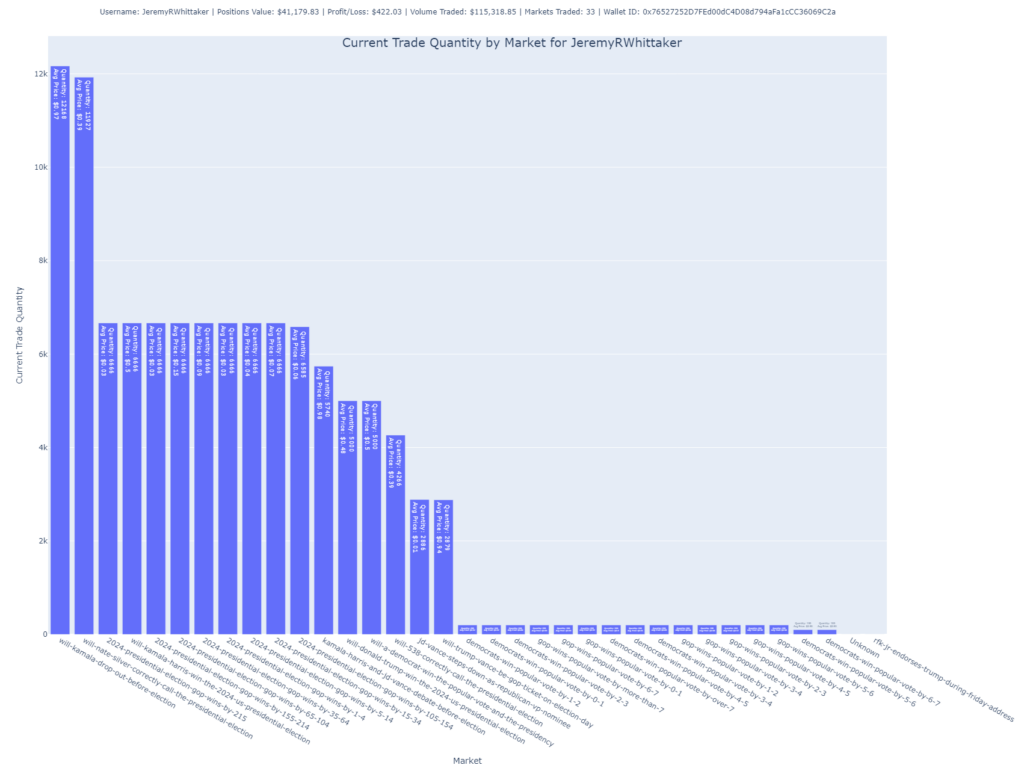

Below are examples of the kinds of charts the script generates and their significance.

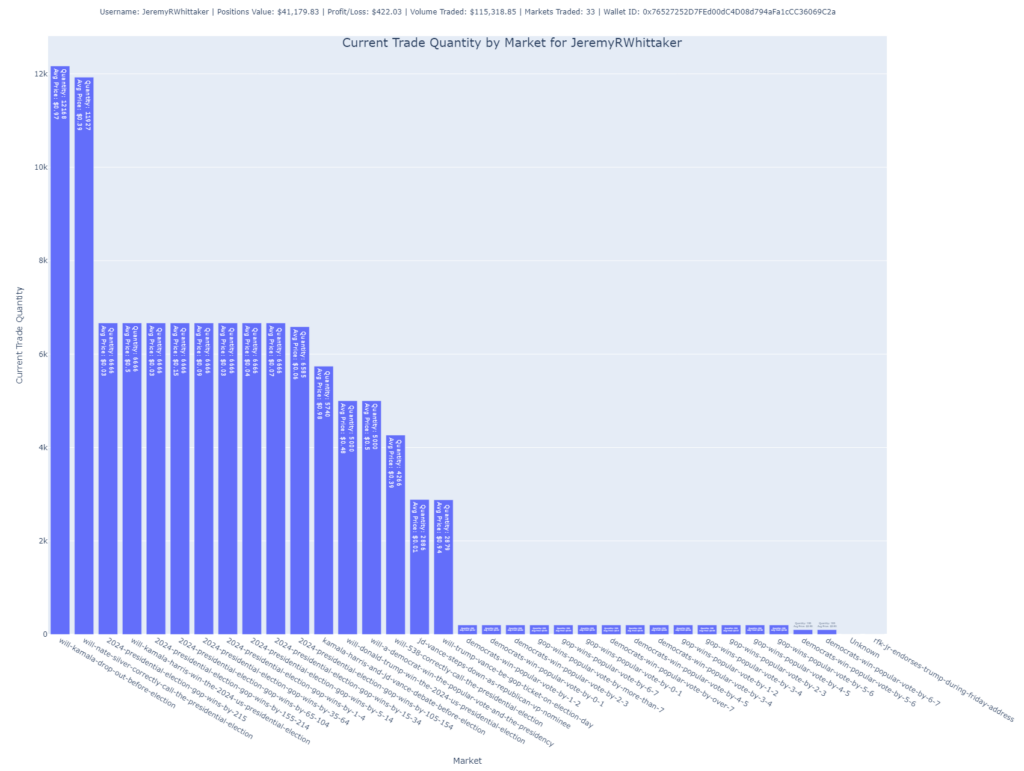

Shares by Market

This chart shows the number of shares the user holds in each market. It is another view of their exposure to various outcomes, measured in share count rather than total purchase value.

- Insight: Larger bars suggest higher exposure to specific markets. The average price paid per share is also annotated, providing further context.

- Purpose: Useful for understanding the user’s position size in each market.
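
As a rough illustration of the aggregation behind this chart, the sketch below nets buys against sells per market in plain Python. The `trades` records and the `net_shares_by_market` helper are hypothetical; the field names (`market_slug`, `transaction_type`, `shares`) simply mirror the columns the script works with.

```python
from collections import defaultdict


def net_shares_by_market(trades):
    """Sum shares per market: buys add to the position, sells subtract."""
    totals = defaultdict(float)
    for trade in trades:
        sign = 1 if trade["transaction_type"] == "buy" else -1
        totals[trade["market_slug"]] += sign * trade["shares"]
    # Largest positions first, matching the bar order in the chart
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))


# Made-up sample trades, for illustration only
trades = [
    {"market_slug": "market-a", "transaction_type": "buy", "shares": 100.0},
    {"market_slug": "market-a", "transaction_type": "sell", "shares": 40.0},
    {"market_slug": "market-b", "transaction_type": "buy", "shares": 250.0},
]
print(net_shares_by_market(trades))
```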

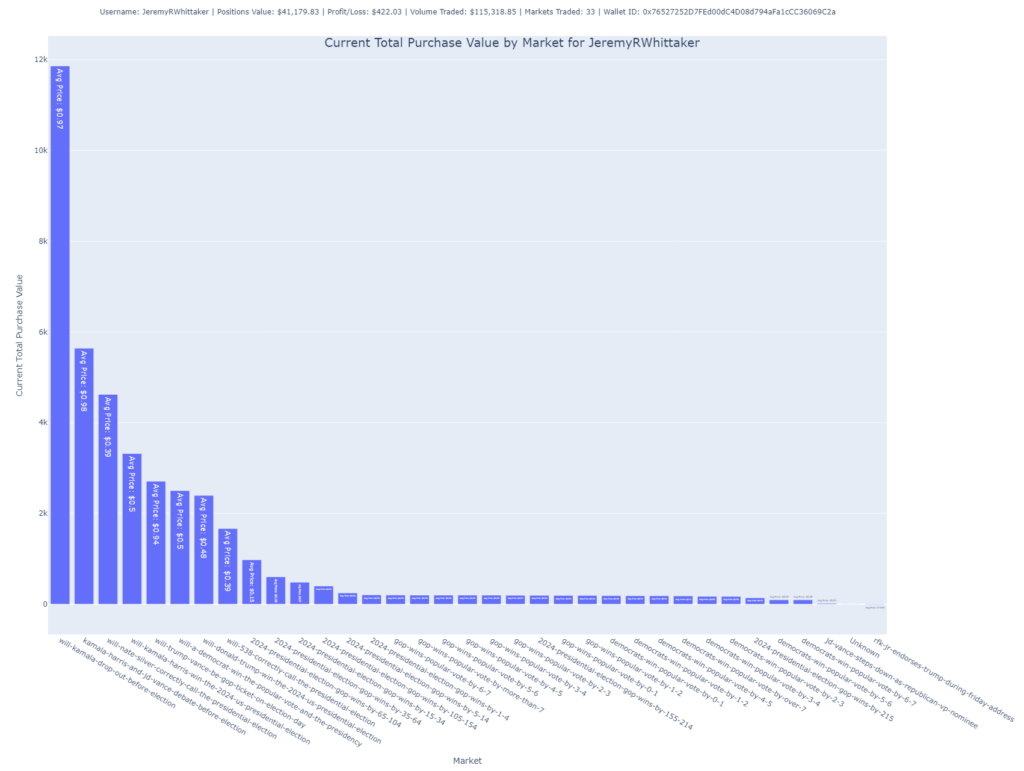

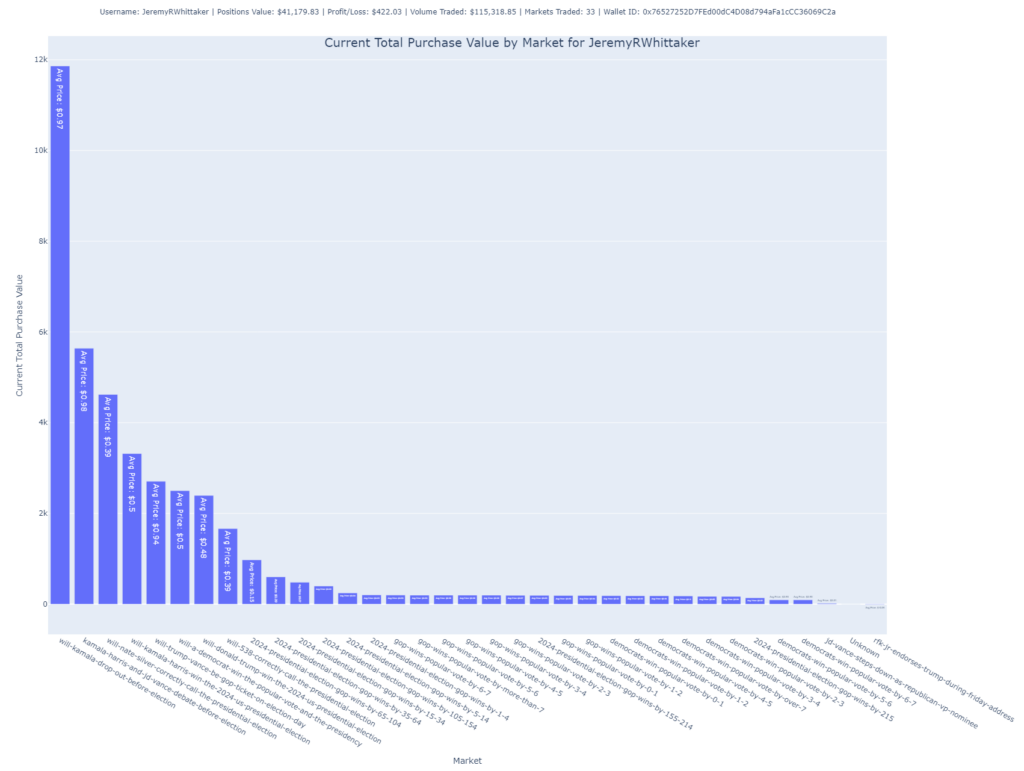

Total Purchase Value by Market

In this bar chart, the user’s total purchase value is broken down by market. The height of each bar indicates how much the user has invested in each specific market.

- Purpose: It allows for a clear visualization of where the user is concentrating their funds, showing which markets hold the largest portion of their portfolio.

- Insight: The accompanying labels provide information about the average price paid per share in each market, helping understand whether the user is buying low or high within a market.
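
The average price label can be thought of as total dollars spent divided by total shares bought. The sketch below shows that calculation for buy trades only; `average_price_by_market` and the sample records are hypothetical, and the script's own version also nets out sells before dividing.

```python
def average_price_by_market(trades):
    """Return {market_slug: (total_value, avg_price_per_share)} for buy trades."""
    value = {}
    shares = {}
    for trade in trades:
        if trade["transaction_type"] != "buy":
            continue
        market = trade["market_slug"]
        value[market] = value.get(market, 0.0) + trade["total_purchase_value"]
        shares[market] = shares.get(market, 0.0) + trade["shares"]
    # Skip markets with zero shares to avoid dividing by zero
    return {m: (value[m], value[m] / shares[m]) for m in value if shares[m] > 0}


# Made-up sample: two buys in the same market at different prices
sample_trades = [
    {"market_slug": "market-a", "transaction_type": "buy",
     "total_purchase_value": 40.0, "shares": 100.0},
    {"market_slug": "market-a", "transaction_type": "buy",
     "total_purchase_value": 60.0, "shares": 100.0},
]
print(average_price_by_market(sample_trades))
```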

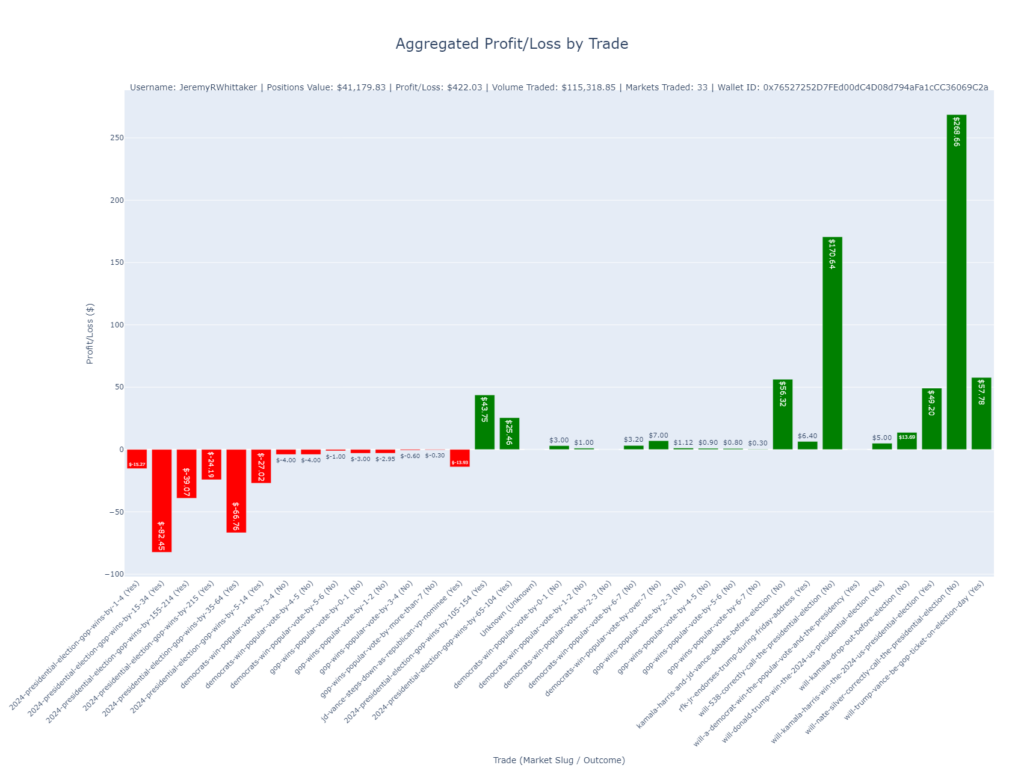

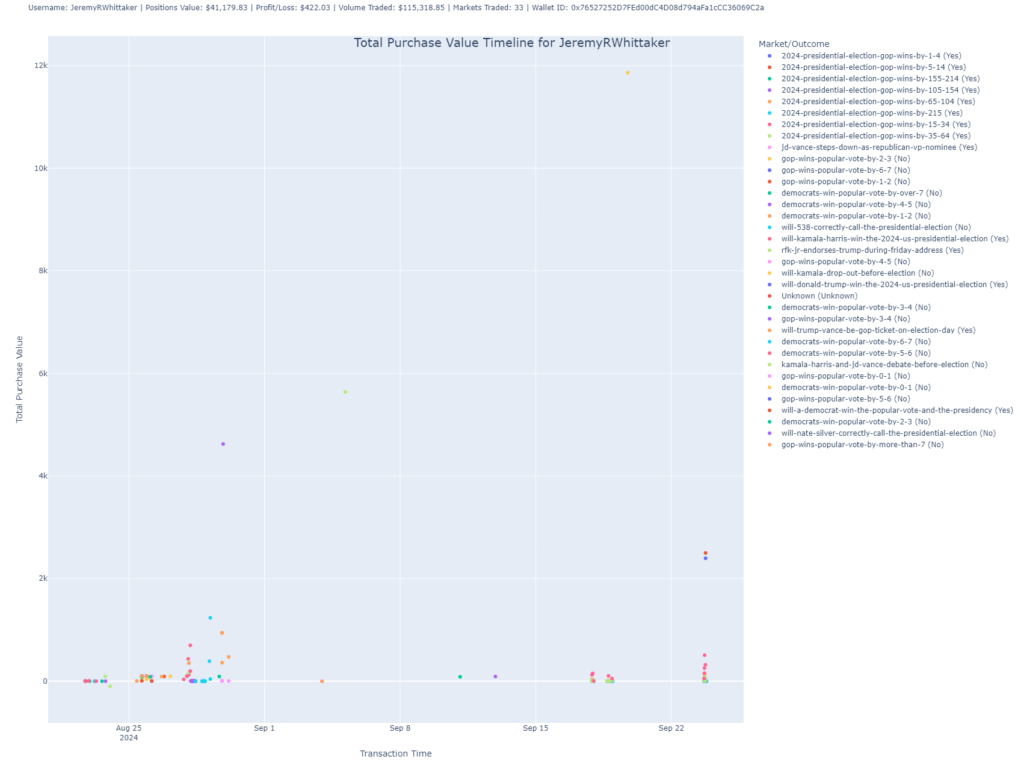

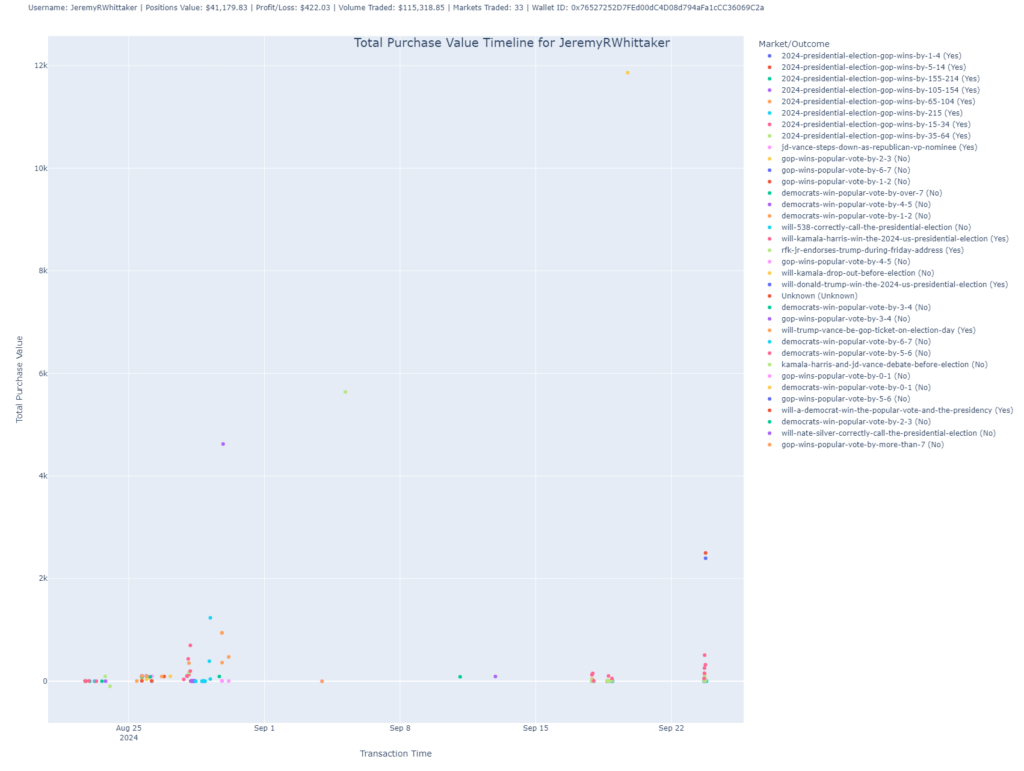

Total Purchase Value Timeline

This scatter plot shows the timeline of the user’s trades by plotting the total purchase value of trades over time.

- Purpose: This chart reveals when the user made their largest investments, showing the fluctuations in purchase value across trades.

- Insight: Each dot represents a trade, with its position on the Y-axis showing the value and on the X-axis showing the timestamp of the transaction. You can use this chart to understand when the user made big moves in the market.
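
To make the axes concrete, here is a minimal sketch of how each dot could be derived from a raw record: the UNIX `timeStamp` becomes a datetime for the X-axis, and the purchase value is signed by trade direction for the Y-axis. The `to_timeline_points` helper and the sample data are assumptions for illustration.

```python
from datetime import datetime, timezone


def to_timeline_points(trades):
    """Convert trades to (datetime, signed_value) points, oldest first."""
    points = []
    for trade in trades:
        # Timestamps arrive as UNIX seconds, often as strings
        when = datetime.fromtimestamp(int(trade["timeStamp"]), tz=timezone.utc)
        sign = 1 if trade["transaction_type"] == "buy" else -1
        points.append((when, sign * trade["total_purchase_value"]))
    return sorted(points, key=lambda point: point[0])


# Made-up sample records
timeline_trades = [
    {"timeStamp": "86400", "transaction_type": "sell", "total_purchase_value": 25.0},
    {"timeStamp": "0", "transaction_type": "buy", "total_purchase_value": 100.0},
]
for when, value in to_timeline_points(timeline_trades):
    print(when.isoformat(), value)
```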

Holdings by Market and Outcome (Treemap)

The treemap provides a more detailed look at the user’s holdings, breaking down their positions by both market and outcome. Each rectangle represents the shares held in a particular market-outcome pair, with the size of the rectangle proportional to the user’s investment.

- Purpose: Ideal for visually assessing how much the user has allocated to each market and outcome combination.

- Insight: It highlights not just which markets the user has invested in but also how they’ve distributed their bets across different outcomes within those markets.
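
A treemap needs a two-level hierarchy: market first, then outcome. The sketch below builds that structure with plain dictionaries; the `holdings_tree` helper and sample trades are hypothetical and only meant to show the grouping.

```python
from collections import defaultdict


def holdings_tree(trades):
    """Build {market_slug: {outcome: net_shares}}, keeping only open positions."""
    tree = defaultdict(lambda: defaultdict(float))
    for trade in trades:
        sign = 1 if trade["transaction_type"] == "buy" else -1
        tree[trade["market_slug"]][trade["outcome"]] += sign * trade["shares"]

    result = {}
    for market, outcomes in tree.items():
        open_positions = {o: s for o, s in outcomes.items() if s > 0}
        if open_positions:
            result[market] = open_positions
    return result


# Made-up sample trades
tree_trades = [
    {"market_slug": "market-a", "outcome": "Yes", "transaction_type": "buy", "shares": 100.0},
    {"market_slug": "market-a", "outcome": "No", "transaction_type": "buy", "shares": 50.0},
    {"market_slug": "market-a", "outcome": "No", "transaction_type": "sell", "shares": 50.0},
    {"market_slug": "market-b", "outcome": "Yes", "transaction_type": "buy", "shares": 30.0},
]
print(holdings_tree(tree_trades))
```

With Plotly Express, the same hierarchy is expressed by passing the two columns as `path=['market_slug', 'outcome']` and the share count as `values` to `px.treemap`.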

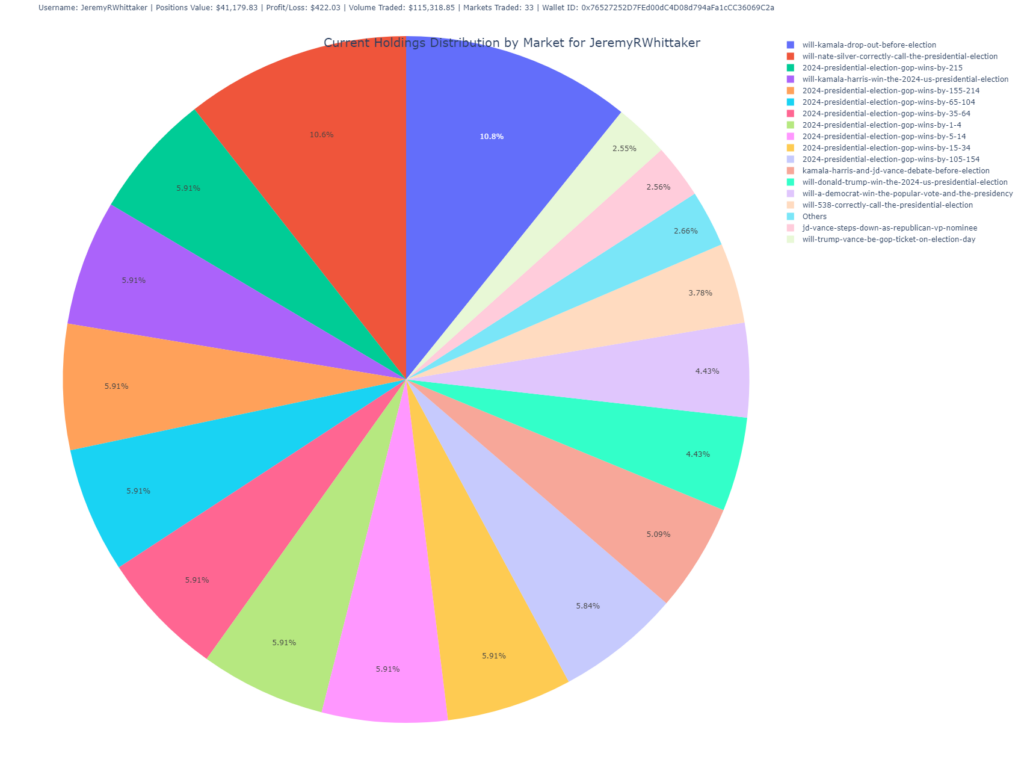

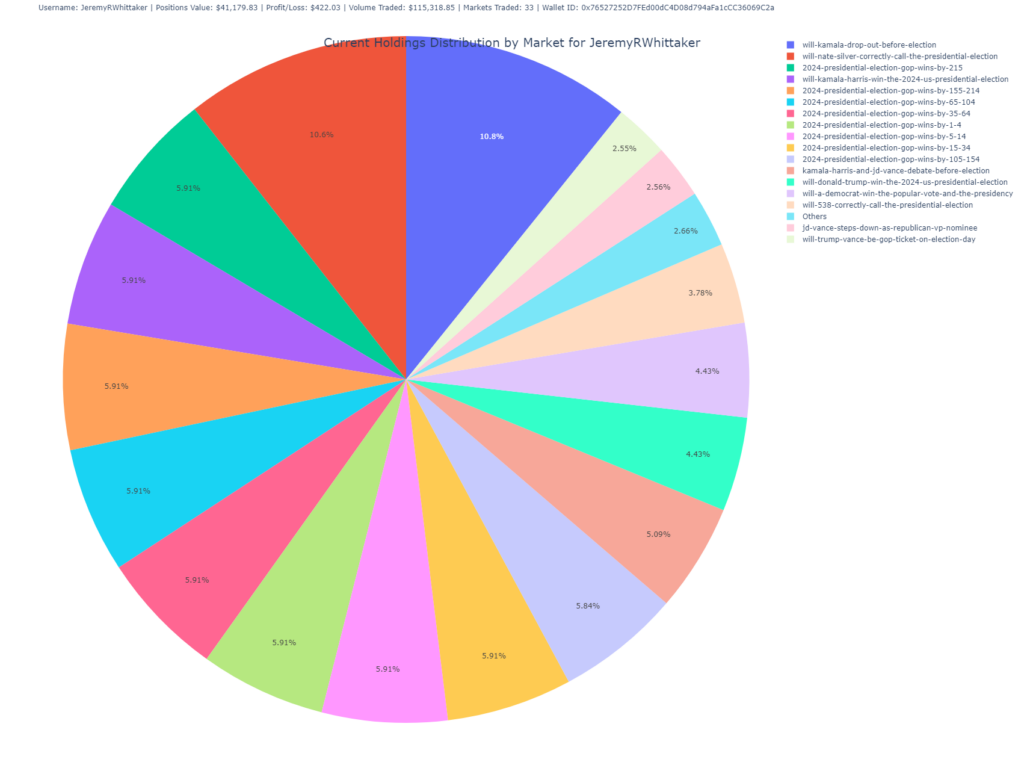

Holdings Distribution by Market (Pie Chart)

This pie chart visualizes the user’s current holdings, showing the percentage distribution of shares across different markets.

- Purpose: Offers a high-level overview of the user’s portfolio diversification across different markets.

- Insight: Larger slices indicate heavier investments in specific markets, allowing you to quickly see where the user has concentrated their bets.
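
The slice sizes are just each market's share count as a percentage of the total. A minimal sketch, assuming a hypothetical `holdings_percentages` helper that takes net shares per market:

```python
def holdings_percentages(shares_by_market):
    """Return each market's share of total holdings as a percentage."""
    # Closed or negative positions do not get a slice
    positive = {m: s for m, s in shares_by_market.items() if s > 0}
    total = sum(positive.values())
    if total == 0:
        return {}
    return {m: round(100 * s / total, 2) for m, s in positive.items()}


# Made-up holdings
print(holdings_percentages({"market-a": 75, "market-b": 25, "market-c": 0}))
```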

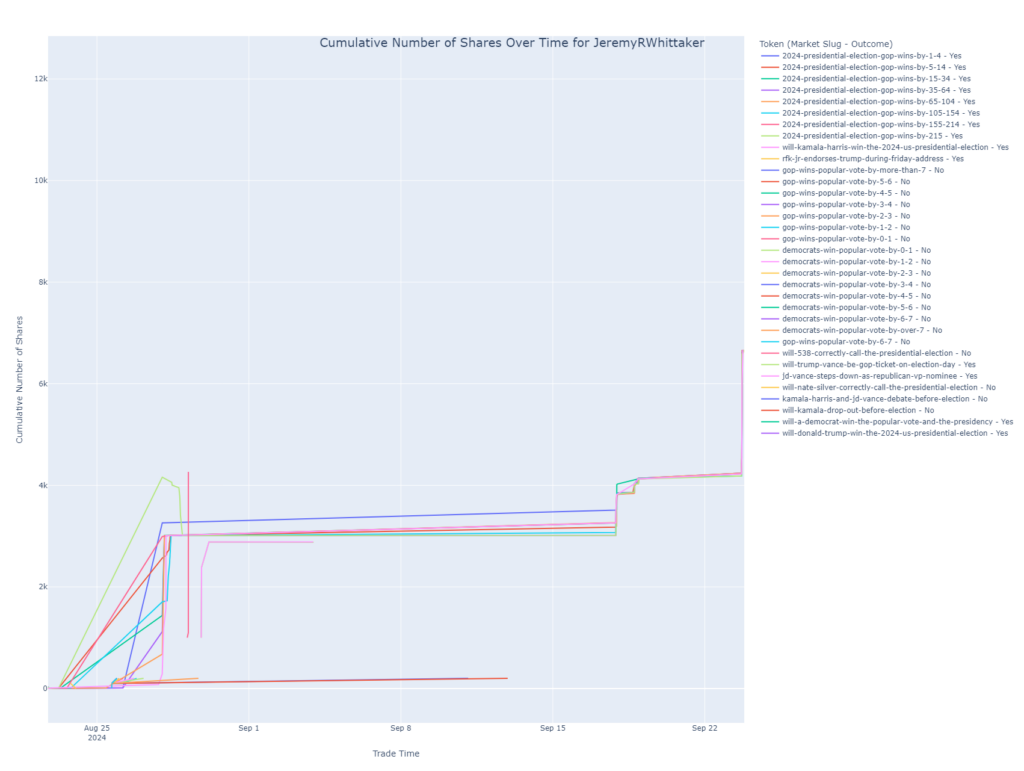

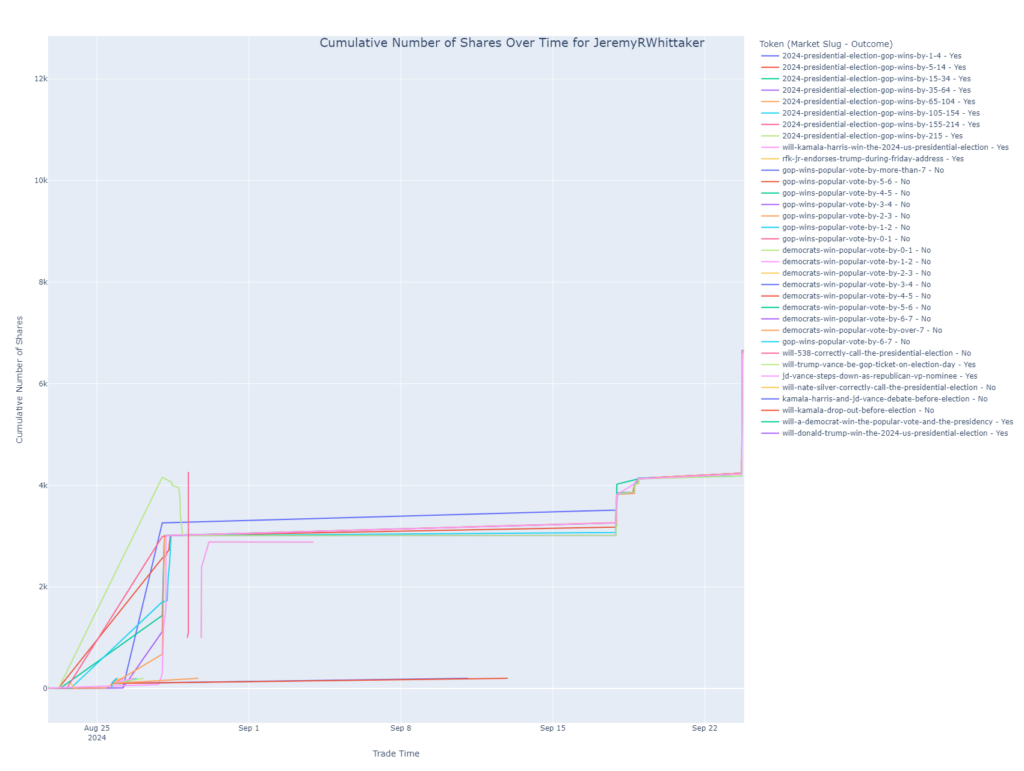

Cumulative Shares Over Time

This line chart tracks the cumulative number of shares held by the user in various markets over time. It helps visualize when the user bought or sold shares and how their position has evolved in each market.

- Purpose: This chart is essential for understanding the user’s trading strategy over time, revealing periods of heavy buying or selling.

- Insight: Each line represents a different market and outcome combination. Peaks in the lines indicate increased positions, while dips show reductions or sales.
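
The cumulative line for each market and outcome is a running sum of position changes in time order. The sketch below shows the idea without pandas; `cumulative_positions` and the sample trades are hypothetical, while the label format (`market_slug - outcome`) follows the script.

```python
from collections import defaultdict


def cumulative_positions(trades):
    """Return {label: [(timestamp, running_shares), ...]} in chronological order."""
    running = defaultdict(float)
    series = defaultdict(list)
    for trade in sorted(trades, key=lambda t: t["timeStamp"]):
        label = f'{trade["market_slug"]} - {trade["outcome"]}'
        # Buys add to the position, sells subtract from it
        change = trade["shares"] if trade["transaction_type"] == "buy" else -trade["shares"]
        running[label] += change
        series[label].append((trade["timeStamp"], running[label]))
    return dict(series)


# Made-up sample trades, deliberately out of order
position_trades = [
    {"timeStamp": 3, "market_slug": "a", "outcome": "Yes", "transaction_type": "sell", "shares": 40},
    {"timeStamp": 1, "market_slug": "a", "outcome": "Yes", "transaction_type": "buy", "shares": 100},
    {"timeStamp": 2, "market_slug": "b", "outcome": "No", "transaction_type": "buy", "shares": 10},
]
print(cumulative_positions(position_trades))
```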

The Code

The code behind these charts fetches data from the Polygon blockchain through the Polygonscan API and processes the transactions associated with a given wallet address. It retrieves ERC-1155 and ERC-20 token transaction data, enriches it with market information, and generates visual insights based on trading activity. You can use this code to analyze any Polymarket user’s trades simply by knowing their wallet address.
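
As a small sketch of the fetching step, the helper below builds a Polygonscan request URL for a wallet's ERC-1155 transfers. The endpoint and the `module`/`action` values match the ones used in the script; the `build_erc1155_url` helper itself and the use of the `address` filter are assumptions for illustration, so check them against the Polygonscan documentation before relying on them.

```python
from urllib.parse import urlencode

POLYGONSCAN_API = "https://api.polygonscan.com/api"


def build_erc1155_url(wallet_address, api_key, page=1, offset=100):
    """Build a paginated ERC-1155 transfer query for one wallet (hypothetical helper)."""
    params = {
        "module": "account",
        "action": "token1155tx",
        "address": wallet_address,  # assumed filter: only this wallet's transfers
        "page": page,
        "offset": offset,
        "startblock": 0,
        "endblock": 99999999,
        "sort": "desc",
        "apikey": api_key,
    }
    return f"{POLYGONSCAN_API}?{urlencode(params)}"


# Placeholder wallet and key, not real credentials
print(build_erc1155_url("0xabc", "YOUR_API_KEY"))
```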

Here’s a breakdown of the main functions:

- fetch_user_transactions(wallet_address, api_key): Fetches all ERC-1155 and ERC-20 transactions for a given wallet from Polygon.

- add_financial_columns(): Processes transactions to calculate key financial metrics like profit/loss, total purchase value, and shares held.

- plot_profit_loss_by_trade(): Generates a bar plot showing profit or loss for each trade.

- plot_shares_over_time(): Creates a line plot showing cumulative shares over time.

- create_and_save_pie_chart(): Generates a pie chart that breaks down holdings by market.

- create_and_save_treemap(): Produces a treemap for holdings based on market and outcome.

- plot_total_purchase_value(): Generates a scatter plot showing total purchase value over time.

This tool offers deep insights into any user’s trading behavior and performance on Polymarket.
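
The financial metrics come down to a little arithmetic on each matched transfer: the USDC amount divided by the token amount gives the price per share, and the USDC amount scaled by its 6 decimals gives the dollar value. The sketch below applies the same formulas to a single record; the `classify_and_price` helper and the sample transfer are hypothetical.

```python
USDC_DECIMALS = 6  # USDC uses 6 decimal places


def classify_and_price(transfer, wallet_address):
    """Label one matched transfer as buy/sell and compute its price, value, and shares."""
    wallet = wallet_address.lower()
    kind = "other"
    if transfer["to"].lower() == wallet:
        kind = "buy"    # outcome tokens arrived in the wallet
    if transfer["from"].lower() == wallet:
        kind = "sell"   # outcome tokens left the wallet

    usdc_raw = float(transfer["value"])         # raw ERC-20 (USDC) amount
    token_raw = float(transfer["tokenValue"])   # raw ERC-1155 (share) amount
    price = usdc_raw / token_raw if token_raw else None
    total_value = usdc_raw / 10 ** USDC_DECIMALS
    shares = total_value / price if price else 0.0
    return {
        "transaction_type": kind,
        "price_paid_per_token": price,
        "total_purchase_value": total_value,
        "shares": shares,
    }


# Made-up transfer: 0.5 USDC paid for 1 share
sample_transfer = {"to": "0xABC", "from": "0xdef", "value": "500000", "tokenValue": "1000000"}
print(classify_and_price(sample_transfer, "0xabc"))
```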

    import argparse
    import csv
    import json
    import logging
    import os
    import re
    import subprocess
    import time
    from importlib import reload

    import numpy as np
    import pandas as pd
    import plotly.express as px
    import requests
    from bs4 import BeautifulSoup
    from dotenv import load_dotenv

    logging.basicConfig(level=logging.DEBUG, format='%(asctime)s - %(levelname)s - %(message)s')
    logger = logging.getLogger(__name__)

    # Load environment variables
    load_dotenv("keys.env")

    price_cache = {}

    # EXCHANGES
    CTF_EXCHANGE = '0x2791Bca1f2de4661ED88A30C99A7a9449Aa84174'
    NEG_RISK_CTF_EXCHANGE = '0x4d97dcd97ec945f40cf65f87097ace5ea0476045'

    # SPENDERS FOR EXCHANGES
    NEG_RISK_CTF_EXCHANGE_SPENDER = '0xC5d563A36AE78145C45a50134d48A1215220f80a'
    NEG_RISK_ADAPTER = '0xd91E80cF2E7be2e162c6513ceD06f1dD0dA35296'
    CTF_EXCHANGE_SPENDER = '0x4bFb41d5B3570DeFd03C39a9A4D8dE6Bd8B8982E'

    CACHE_EXPIRATION_TIME = 60 * 30  # Cache expiration time in seconds (30 minutes)
    PRICE_CACHE_FILE = './data/live_price_cache.json'

    # Dictionary to cache live prices
    live_price_cache = {}


    def load_price_cache():
        """Load the live price cache from a JSON file."""
        if os.path.exists(PRICE_CACHE_FILE):
            try:
                with open(PRICE_CACHE_FILE, 'r') as file:
                    return json.load(file)
            except json.JSONDecodeError as e:
                logger.error(f"Error loading price cache: {e}")
                return {}
        return {}


    def save_price_cache(cache):
        """Save the live price cache to a JSON file."""
        with open(PRICE_CACHE_FILE, 'w') as file:
            json.dump(cache, file)


    def is_cache_valid(cache_entry, expiration_time=CACHE_EXPIRATION_TIME):
        """
        Check if the cache entry is still valid based on the current time and expiration time.
        """
        if not cache_entry:
            return False
        cached_time = cache_entry.get('timestamp', 0)
        return (time.time() - cached_time) < expiration_time


    def call_get_live_price(token_id, expiration_time=CACHE_EXPIRATION_TIME):
        """
        Get live price from cache or update it if expired.
        """
        logger.info(f'Getting live price for token {token_id}')

        # Load existing cache
        price_cache = load_price_cache()
        cache_key = f"{token_id}"

        # Check if cache is valid
        if cache_key in price_cache and is_cache_valid(price_cache[cache_key], expiration_time):
            logger.info(f'Returning cached price for {cache_key}')
            return price_cache[cache_key]['price']

        # If cache is expired or doesn't exist, fetch live price
        try:
            result = subprocess.run(
                ['python3', 'get_live_price.py', token_id],
                stdout=subprocess.PIPE,
                stderr=subprocess.PIPE,
                text=True,
                check=True
            )

            # Parse the live price from the subprocess output
            output_lines = result.stdout.strip().split("\n")
            live_price_line = next((line for line in output_lines if "Live price for token" in line), None)
            if live_price_line:
                live_price = float(live_price_line.strip().split(":")[-1].strip())
            else:
                logger.error("Live price not found in subprocess output.")
                return None

            logger.debug(f"Subprocess get_live_price output: {result.stdout}")

            # Update cache with the new price and timestamp
            price_cache[cache_key] = {'price': live_price, 'timestamp': time.time()}
            save_price_cache(price_cache)
            return live_price

        except subprocess.CalledProcessError as e:
            logger.error(f"Subprocess get_live_price error: {e.stderr}")
            return None
        except Exception as e:
            logger.error(f"Error fetching live price: {str(e)}")
            return None


    def update_live_price_and_pl(merged_df, contract_token_id, market_slug=None, outcome=None):
        """
        Calculate the live price and profit/loss (pl) for each trade in the DataFrame.
        """
        # Ensure tokenID in merged_df is string
        merged_df['tokenID'] = merged_df['tokenID'].astype(str)
        contract_token_id = str(contract_token_id)

        # Check for NaN or empty token IDs
        if not contract_token_id or contract_token_id == 'nan':
            logger.warning("Encountered NaN or empty contract_token_id. Skipping.")
            return merged_df

        # Add live_price and pl columns if they don't exist
        if 'live_price' not in merged_df.columns:
            merged_df['live_price'] = np.nan
        if 'pl' not in merged_df.columns:
            merged_df['pl'] = np.nan

        # Filter rows with the same contract_token_id and outcome
        merged_df['outcome'] = merged_df['outcome'].astype(str)
        matching_rows = merged_df[(merged_df['tokenID'] == contract_token_id) &
                                  (merged_df['outcome'].str.lower() == outcome.lower())]

        if not matching_rows.empty:
            logger.info(f'Fetching live price for token {contract_token_id}')
            live_price = call_get_live_price(contract_token_id)
            logger.info(f'Live price for token {contract_token_id}: {live_price}')

            if live_price is not None:
                try:
                    # Calculate profit/loss based on the live price
                    price_paid_per_token = matching_rows['price_paid_per_token']
                    total_purchase_value = matching_rows['total_purchase_value']
                    pl = ((live_price - price_paid_per_token) / price_paid_per_token) * total_purchase_value

                    # Update the DataFrame with live price and pl
                    merged_df.loc[matching_rows.index, 'live_price'] = live_price
                    merged_df.loc[matching_rows.index, 'pl'] = pl
                except Exception as e:
                    logger.error(f"Error calculating live price and profit/loss: {e}")
            else:
                logger.warning(f"Live price not found for tokenID {contract_token_id}")
                merged_df.loc[matching_rows.index, 'pl'] = np.nan

        return merged_df


    def find_token_id(market_slug, outcome, market_lookup):
        """Find the token_id based on market_slug and outcome."""
        for market in market_lookup.values():
            if market['market_slug'] == market_slug:
                for token in market['tokens']:
                    if token['outcome'].lower() == outcome.lower():
                        return token['token_id']
        return None


    def fetch_data(url):
        """Fetch data from a given URL and return the JSON response."""
        try:
            response = requests.get(url, timeout=10)  # You can specify a timeout
            response.raise_for_status()  # Raise an error for bad responses (4xx, 5xx)
            return response.json()
        except requests.exceptions.RequestException as e:
            logger.error(f"Error fetching data from URL: {url}. Exception: {e}")
            return None


    def fetch_all_pages(api_key, token_ids, market_slug_outcome_map, csv_output_dir='./data/polymarket_trades/'):
        page = 1
        offset = 100
        retry_attempts = 0
        all_data = []  # Store all data here

        while True:
            url = f"https://api.polygonscan.com/api?module=account&action=token1155tx&contractaddress={NEG_RISK_CTF_EXCHANGE}&page={page}&offset={offset}&startblock=0&endblock=99999999&sort=desc&apikey={api_key}"
            logger.info(f"Fetching transaction data for tokens {token_ids}, page: {page}")
            data = fetch_data(url)

            if data and data['status'] == '1':
                df = pd.DataFrame(data['result'])
                if df.empty:
                    logger.info("No more transactions found, ending pagination.")
                    break  # Exit if there are no more transactions
                all_data.append(df)
                page += 1  # Go to the next page
            else:
                logger.error(f"API response error or no data found for page {page}")
                if retry_attempts < 5:
                    retry_attempts += 1
                    time.sleep(retry_attempts)
                else:
                    break

        if all_data:
            final_df = pd.concat(all_data, ignore_index=True)  # Combine all pages
            logger.info(f"Fetched {len(final_df)} transactions across all pages.")
            return final_df
        return None


    def validate_market_lookup(token_ids, market_lookup):
        valid_token_ids = []
        invalid_token_ids = []

        for token_id in token_ids:
            market_slug, outcome = find_market_info(token_id, market_lookup)
            if market_slug and outcome:
                valid_token_ids.append(token_id)
            else:
                invalid_token_ids.append(token_id)

        logger.info(f"Valid token IDs: {valid_token_ids}")
        if invalid_token_ids:
            logger.warning(f"Invalid or missing market info for token IDs: {invalid_token_ids}")
        return valid_token_ids


    def sanitize_filename(filename):
        """
        Sanitize the filename by removing or replacing invalid characters.
        """
        # Replace invalid characters with an underscore
        return re.sub(r'[\\/*?:"|]', '_', filename)


    def sanitize_directory(directory):
        """
        Sanitize the directory name by removing or replacing invalid characters.
        """
        # Replace invalid characters with an underscore
        return re.sub(r'[\\/*?:"|]', '_', directory)


    def extract_wallet_ids(leaderboard_url):
        """Scrape the Polymarket leaderboard to extract wallet IDs."""
        logging.info(f"Fetching leaderboard page: {leaderboard_url}")
        response = requests.get(leaderboard_url)
        if response.status_code != 200:
            logging.error(f"Failed to load page {leaderboard_url}, status code: {response.status_code}")
            return []

        logging.debug(f"Page loaded successfully, status code: {response.status_code}")
        soup = BeautifulSoup(response.content, 'html.parser')
        logging.debug("Page content parsed with BeautifulSoup")

        wallet_ids = []

        # Debug: Check if tags are being found correctly
        a_tags = soup.find_all('a', href=True)
        logging.debug(f"Found {len(a_tags)} tags in the page.")

        for a_tag in a_tags:
            href = a_tag['href']
            logging.debug(f"Processing href: {href}")
            if href.startswith('/profile/'):
                wallet_id = href.split('/')[-1]
                wallet_ids.append(wallet_id)
                logging.info(f"Extracted wallet ID: {wallet_id}")
            else:
                logging.debug(f"Skipped href: {href}")

        return wallet_ids


    def load_market_lookup(json_path):
        """Load market lookup data from a JSON file."""
        with open(json_path, 'r') as json_file:
            return json.load(json_file)


    def find_market_info(token_id, market_lookup):
        """Find market_slug and outcome based on tokenID."""
        token_id = str(token_id)  # Ensure token_id is a string
        if not token_id or token_id == 'nan':
            logger.warning("Token ID is NaN or empty. Skipping lookup.")
            return None, None

        logger.debug(f"Looking up market info for tokenID: {token_id}")
        for market in market_lookup.values():
            for token in market['tokens']:
                if str(token['token_id']) == token_id:
                    logger.debug(
                        f"Found market info for tokenID {token_id}: market_slug = {market['market_slug']}, outcome = {token['outcome']}")
                    return market['market_slug'], token['outcome']

        logger.warning(f"No market info found for tokenID: {token_id}")
        return None, None


    def save_to_csv(filename, data, headers, output_dir):
        """Save data to a CSV file in the specified output directory."""
        filepath = os.path.join(output_dir, filename)
        with open(filepath, 'w', newline='') as file:
            writer = csv.DictWriter(file, fieldnames=headers)
            writer.writeheader()
            for entry in data:
                writer.writerow(entry)
        logger.info(f"Saved data to {filepath}")


    def add_timestamps(erc1155_df, erc20_df):
        """
        Rename timestamp columns and convert them from UNIX to datetime.
        """
        # Rename the timestamp columns to avoid conflicts during merge
        erc1155_df.rename(columns={'timeStamp': 'timeStamp_erc1155'}, inplace=True)
        erc20_df.rename(columns={'timeStamp': 'timeStamp_erc20'}, inplace=True)

        # Convert UNIX timestamps to datetime format
        erc1155_df['timeStamp_erc1155'] = pd.to_numeric(erc1155_df['timeStamp_erc1155'], errors='coerce')
        erc20_df['timeStamp_erc20'] = pd.to_numeric(erc20_df['timeStamp_erc20'], errors='coerce')
        erc1155_df['timeStamp_erc1155'] = pd.to_datetime(erc1155_df['timeStamp_erc1155'], unit='s', errors='coerce')
        erc20_df['timeStamp_erc20'] = pd.to_datetime(erc20_df['timeStamp_erc20'], unit='s', errors='coerce')

        return erc1155_df, erc20_df


    def enrich_erc1155_data(erc1155_df, market_lookup):
        """
        Enrich the ERC-1155 DataFrame with market_slug and outcome based on market lookup.
        """

        def get_market_info(token_id):
            if pd.isna(token_id) or str(token_id) == 'nan':
                return 'Unknown', 'Unknown'
            for market in market_lookup.values():
                for token in market['tokens']:
                    if str(token['token_id']) == str(token_id):
                        return market['market_slug'], token['outcome']
            return 'Unknown', 'Unknown'

        erc1155_df['market_slug'], erc1155_df['outcome'] = zip(
            *erc1155_df['tokenID'].apply(lambda x: get_market_info(x))
        )
        return erc1155_df


    def get_transaction_details_by_hash(transaction_hash, api_key, output_dir='./data/polymarket_trades/'):
        """
        Fetch the transaction details by hash from Polygonscan, parse the logs,
        and save the flattened data as a CSV.

        Args:
        - transaction_hash (str): The hash of the transaction.
        - api_key (str): The Polygonscan API key.
        - output_dir (str): The directory to save the CSV file.

        Returns:
        - DataFrame or None: The parsed logs (also saved to a CSV), or None on failure.
        """
        # Ensure output directory exists
        os.makedirs(output_dir, exist_ok=True)

        # Construct the API URL for fetching transaction receipt details by hash
        url = f"https://api.polygonscan.com/api?module=proxy&action=eth_getTransactionReceipt&txhash={transaction_hash}&apikey={api_key}"
        logger.info(f"Fetching transaction details for hash: {transaction_hash}")
        logger.debug(f"Request URL: {url}")

        try:
            # Fetch transaction details
            response = requests.get(url)
            logger.debug(f"Polygonscan API response status: {response.status_code}")
            if response.status_code != 200:
                logger.error(f"Non-200 status code received: {response.status_code}")
                return None

            # Parse the JSON response
            data = response.json()
            logger.debug(f"Response JSON: {data}")

            # Check if the status is successful
            if data.get('result') is None:
                logger.error(f"Error in API response: {data.get('message', 'Unknown error')}")
                return None

            # Extract the logs
            logs = data['result']['logs']
            logs_df = pd.json_normalize(logs)

            # Save the logs to a CSV file for easier review
            csv_filename = os.path.join(output_dir, f"transaction_logs_{transaction_hash}.csv")
            logs_df.to_csv(csv_filename, index=False)
            logger.info(f"Parsed logs saved to {csv_filename}")
            return logs_df

        except Exception as e:
            logger.error(f"Exception occurred while fetching transaction details for hash {transaction_hash}: {e}")
            return None


    def add_financial_columns(erc1155_df, erc20_df, wallet_id, market_lookup):
        """
        Merge the ERC-1155 and ERC-20 dataframes, calculate financial columns,
        including whether a trade was won or lost, and fetch the latest price
        for each contract and tokenID.
        """
        # Merge the two dataframes on the 'hash' column
        merged_df = pd.merge(erc1155_df, erc20_df, how='outer', on='hash', suffixes=('_erc1155', '_erc20'))

        # Convert wallet ID and columns to lowercase for case-insensitive comparison
        wallet_id = wallet_id.lower()
        merged_df['to_erc1155'] = merged_df['to_erc1155'].astype(str).str.lower()
        merged_df['from_erc1155'] = merged_df['from_erc1155'].astype(str).str.lower()

        # Remove rows where 'tokenID' is NaN or 'nan'
        merged_df['tokenID'] = merged_df['tokenID'].astype(str)
        merged_df = merged_df[~merged_df['tokenID'].isnull() & (merged_df['tokenID'] != 'nan')]

        # Set transaction type based on wallet address
        merged_df['transaction_type'] = 'other'
        merged_df.loc[merged_df['to_erc1155'] == wallet_id, 'transaction_type'] = 'buy'
        merged_df.loc[merged_df['from_erc1155'] == wallet_id, 'transaction_type'] = 'sell'

        # Calculate the purchase price per token and total dollar value
        if 'value' in merged_df.columns and 'tokenValue' in merged_df.columns:
            merged_df['price_paid_per_token'] = merged_df['value'].astype(float) / merged_df['tokenValue'].astype(float)
            merged_df['total_purchase_value'] = merged_df['value'].astype(float) / 10**6  # USDC has 6 decimal places
            merged_df['shares'] = merged_df['total_purchase_value'] / merged_df['price_paid_per_token']
        else:
            logger.error("The necessary columns for calculating purchase price are missing.")
            return merged_df

        # Create the 'lost' and 'won' columns
        merged_df['lost'] = (
            (merged_df['to_erc1155'] == '0x0000000000000000000000000000000000000000') &
            (merged_df['transaction_type'] == 'sell') &
            (merged_df['price_paid_per_token'].isna() | (merged_df['price_paid_per_token'] == 0))
        ).astype(int)

        merged_df['won'] = (
            (merged_df['transaction_type'] == 'sell') &
            (merged_df['price_paid_per_token'] == 1)
        ).astype(int)

        merged_df.loc[merged_df['lost'] == 1, 'shares'] = 0
        merged_df.loc[merged_df['lost'] == 1, 'total_purchase_value'] = 0

        # Fetch live prices and calculate profit/loss (pl)
        merged_df['tokenID'] = merged_df['tokenID'].astype(str)
        merged_df = update_latest_prices(merged_df, market_lookup)

        return merged_df


    def plot_profit_loss_by_trade(df, user_info):
        """
        Create a bar plot to visualize aggregated Profit/Loss (PL) by trade,
        with values rounded to two decimal places and formatted as currency.

        Args:
            df (DataFrame): DataFrame containing trade data, including 'market_slug', 'outcome', and 'pl'.
            user_info (dict): Dictionary containing user information, such as username, wallet address,
                and other relevant details.
        """
        if 'pl' not in df.columns or df['pl'].isnull().all():
            logger.warning("No PL data available for plotting. Skipping plot.")
            return

        username = user_info.get("username", "Unknown User")
        wallet_id = user_info.get("wallet_address", "N/A")
        positions_value = user_info.get("positions_value", "N/A")
        profit_loss = user_info.get("profit_loss", "N/A")
        volume_traded = user_info.get("volume_traded", "N/A")
        markets_traded = user_info.get("markets_traded", "N/A")

        # Combine market_slug and outcome to create a trade identifier
        df['trade'] = df['market_slug'] + ' (' + df['outcome'] + ')'

        # Aggregate the Profit/Loss (pl) for each unique trade
        aggregated_df = df.groupby('trade', as_index=False).agg({'pl': 'sum'})

        # Round PL values to two decimal places
        aggregated_df['pl'] = aggregated_df['pl'].round(2)

        # Format the PL values with a dollar sign for display
        aggregated_df['pl_display'] = aggregated_df['pl'].apply(lambda x: f"${x:,.2f}")

        # Define a color mapping based on Profit/Loss sign
        aggregated_df['color'] = aggregated_df['pl'].apply(lambda x: 'green' if x >= 0 else 'red')

        # Create the plot without using the color axis
        fig = px.bar(
            aggregated_df,
            x='trade',
            y='pl',
            title='',
            labels={'pl': 'Profit/Loss ($)', 'trade': 'Trade (Market Slug / Outcome)'},
            text='pl_display',
            color='color',  # Use the color column
            color_discrete_map={'green': 'green', 'red': 'red'},
        )

        # Remove the legend if you don't want it
        fig.update_layout(showlegend=False)

        # Rotate x-axis labels for better readability and set the main title
        fig.update_layout(
            title={
                'text': 'Aggregated Profit/Loss by Trade',
                'y': 0.95,
                'x': 0.5,
                'xanchor': 'center',
                'yanchor': 'top',
                'font': {'size': 24}
            },
            xaxis_tickangle=-45,
            margin=dict(t=150, l=50, r=50, b=100)
        )

        # Prepare the subtitle text with user information
        subtitle_text = (
            f"Username: {username} | Positions Value: {positions_value} | "
            f"Profit/Loss: {profit_loss} | Volume Traded: {volume_traded} | "
            f"Markets Traded: {markets_traded} | Wallet ID: {wallet_id}"
        )

        # Add the subtitle as an annotation
        fig.add_annotation(
            text=subtitle_text,
            xref="paper",
            yref="paper",
            x=0.5,
            y=1.02,
            xanchor='center',
            yanchor='top',
            showarrow=False,
            font=dict(size=14)
        )

        # Save the plot
        plot_dir = "./plots/user_trades"
        os.makedirs(plot_dir, exist_ok=True)
        sanitized_username = sanitize_filename(username)
        plot_file = os.path.join(plot_dir, f"{sanitized_username}_aggregated_profit_loss_by_trade.html")
        fig.write_html(plot_file)
        logger.info(f"Aggregated Profit/Loss by trade plot saved to {plot_file}")


    def plot_shares_over_time(df, user_info):
        """
        Create a line plot to visualize the cumulative number of shares for each token over time.
        Buy orders add to the position, and sell orders subtract from it.

        Args:
            df (DataFrame): DataFrame containing trade data, including 'timeStamp_erc1155', 'shares',
                'market_slug', 'outcome', and 'transaction_type' ('buy' or 'sell').
            user_info (dict): Dictionary containing user information, such as username, wallet address,
                and other relevant details.
        """
        if 'shares' not in df.columns or df['shares'].isnull().all():
            logger.warning("No 'shares' data available for plotting. Skipping plot.")
            return

        username = user_info.get("username", "Unknown User")

        # Ensure 'timeStamp_erc1155' is a datetime type, just in case it needs to be converted
        if df['timeStamp_erc1155'].dtype != 'datetime64[ns]':
            df['timeStamp_erc1155'] = pd.to_datetime(df['timeStamp_erc1155'], errors='coerce')

        # Drop rows with NaN values in 'timeStamp_erc1155', 'shares', 'market_slug', 'outcome', or 'transaction_type'
        df = df.dropna(subset=['timeStamp_erc1155', 'shares', 'market_slug', 'outcome', 'transaction_type'])

        # Sort the dataframe by time to ensure the line chart shows the data in chronological order
        df = df.sort_values(by='timeStamp_erc1155')

        # Combine 'market_slug' and 'outcome' to create a unique label for each token
        df['token_label'] = df['market_slug'] + " - " + df['outcome']

        # Create a column for 'position_change' which adds shares for buys and
        # subtracts shares for sells based on 'transaction_type'
        df['position_change'] = df.apply(
            lambda row: row['shares'] if row['transaction_type'] == 'buy' else -row['shares'], axis=1)

        # Group by 'token_label' and calculate the cumulative position
        df['cumulative_position'] = df.groupby('token_label')['position_change'].cumsum()

        # Forward fill the cumulative position to maintain it between trades
        df['cumulative_position'] = df.groupby('token_label')['cumulative_position'].ffill()

        # Create the line plot, grouping by 'token_label' for separate lines per token ID
        fig = px.line(
            df,
            x='timeStamp_erc1155',
            y='cumulative_position',
            color='token_label',  # This ensures each token ID (market_slug + outcome) gets its own line
            title=f'Cumulative Shares Over Time for {username}',
            labels={'timeStamp_erc1155': 'Trade Time', 'cumulative_position': 'Cumulative Position',
                    'token_label': 'Token (Market Slug - Outcome)'},
            line_shape='linear'
        )

        # Update layout for better aesthetics
        fig.update_layout(
            title={
                'text': f"Cumulative Number of Shares Over Time for {username}",
                'y': 0.95,
                'x': 0.5,
                'xanchor': 'center',
                'yanchor': 'top',
                'font': {'size': 20}
            },
            margin=dict(t=60),
            xaxis_title="Trade Time",
            yaxis_title="Cumulative Number of Shares",
            legend_title="Token (Market Slug - Outcome)"
        )

        # Save the plot
        plot_dir = "./plots/user_trades"
        os.makedirs(plot_dir, exist_ok=True)
        sanitized_username = sanitize_filename(username)
        plot_file = os.path.join(plot_dir, f"{sanitized_username}_shares_over_time.html")
        fig.write_html(plot_file)
        logger.info(f"Cumulative shares over time plot saved to {plot_file}")


    def plot_user_trades(df, user_info):
        """Plot user trades and save plots, adjusting for trades that were lost."""
        username = user_info["username"]
        wallet_id = user_info["wallet_address"]

        # Sanitize only the filename, not the directory
        sanitized_username = sanitize_filename(username)

        info_text = (
            f"Username: {username} | Positions Value: {user_info['positions_value']} | "
            f"Profit/Loss: {user_info['profit_loss']} | Volume Traded: {user_info['volume_traded']} | "
            f"Markets Traded: {user_info['markets_traded']} | Wallet ID: {wallet_id}"
        )

        # Ensure the directory exists
        os.makedirs("./plots/user_trades", exist_ok=True)
        plot_dir = "./plots/user_trades"

        # Flag loss trades where to_erc1155 is zero address, transaction_type is sell,
        # and price_paid_per_token is NaN
        df['is_loss'] = df.apply(
            lambda row: (row['to_erc1155'] == '0x0000000000000000000000000000000000000000')
            and (row['transaction_type'] == 'sell')
            and pd.isna(row['price_paid_per_token']), axis=1)

        # Set shares and total purchase value to zero for loss trades
        df.loc[df['is_loss'], 'shares'] = 0
        df.loc[df['is_loss'], 'total_purchase_value'] = 0

        ### Modify for Total Purchase Value by Market (Current holdings)
        df['total_purchase_value_adjusted'] = df.apply(
            lambda row: row['total_purchase_value'] if row['transaction_type'] == 'buy' else -row['total_purchase_value'],
            axis=1
        )

        grouped_df_value = df.groupby(['market_slug']).agg({
            'total_purchase_value_adjusted': 'sum',
            'shares': 'sum',
        }).reset_index()

        # Calculate the weighted average price_paid_per_token
        grouped_df_value['weighted_price_paid_per_token'] = (
            grouped_df_value['total_purchase_value_adjusted'] / grouped_df_value['shares']
        )

        # Sort by total_purchase_value in descending order (ignoring outcome)
        grouped_df_value = grouped_df_value.sort_values(by='total_purchase_value_adjusted', ascending=False)

        # Format the label for the bars (removing outcome)
        grouped_df_value['bar_label'] = (
            "Avg Price: $" + grouped_df_value['weighted_price_paid_per_token'].round(2).astype(str)
        )

        fig = px.bar(
            grouped_df_value,
            x='market_slug',
            y='total_purchase_value_adjusted',
            barmode='group',
            title=f"Current Total Purchase Value by Market for {username}",
            labels={'total_purchase_value_adjusted': 'Current Total Purchase Value', 'market_slug': 'Market'},
            text=grouped_df_value['bar_label'],
            hover_data={'weighted_price_paid_per_token': ':.2f'},
        )

        fig.update_layout(
            title={
                'text': f"Current Total Purchase Value by Market for {username}",
                'y': 0.95,
                'x': 0.5,
                'xanchor': 'center',
                'yanchor': 'top',
                'font': {'size': 20}
            },
            margin=dict(t=60),
            showlegend=False  # Remove the legend
        )

        fig.add_annotation(
            text=info_text,
            xref="paper", yref="paper", showarrow=False, x=0.5, y=1.05, font=dict(size=12)
        )

        # Save the bar plot as an HTML file
        plot_file = os.path.join(plot_dir, f"{sanitized_username}_current_market_purchase_value.html")
        fig.write_html(plot_file)
        logger.info(f"Current market purchase value plot saved to {plot_file}")

        ### Modify for Trade Quantity by Market (Current holdings)
        df['shares_adjusted'] = df.apply(
            lambda row: row['shares'] if row['transaction_type'] == 'buy' else -row['shares'],
            axis=1)

        grouped_df_quantity = df.groupby(['market_slug']).agg({
            'shares_adjusted': 'sum',
            'total_purchase_value': 'sum',
        }).reset_index()

        # Calculate the weighted average price_paid_per_token
        grouped_df_quantity['weighted_price_paid_per_token'] = (
            grouped_df_quantity['total_purchase_value'] / grouped_df_quantity['shares_adjusted']
        )

        grouped_df_quantity = grouped_df_quantity.sort_values(by='shares_adjusted', ascending=False)

        grouped_df_quantity['bar_label'] = (
            "Quantity: " + grouped_df_quantity['shares_adjusted'].round().astype(int).astype(str) + "<br>" +
            "Avg Price: $" + grouped_df_quantity['weighted_price_paid_per_token'].round(2).astype(str)
        )

        fig = px.bar(
            grouped_df_quantity,
            x='market_slug',
            y='shares_adjusted',
            barmode='group',
            title=f"Current Trade Quantity by Market for {username}",
            labels={'shares_adjusted': 'Current Trade Quantity', 'market_slug': 'Market'},
            text=grouped_df_quantity['bar_label'],
        )

        fig.update_layout(
            title={
                'text': f"Current Trade Quantity by Market for {username}",
                'y': 0.95,
                'x': 0.5,
                'xanchor': 'center',
                'yanchor': 'top',
                'font': {'size': 20}
            },
            margin=dict(t=60),
            showlegend=False  # Remove the legend
        )

        fig.add_annotation(
            text=info_text,
            xref="paper", yref="paper", showarrow=False, x=0.5, y=1.05, font=dict(size=12)
        )

        # Save the trade quantity plot as an HTML file
        plot_file = os.path.join(plot_dir, f"{sanitized_username}_current_market_trade_quantity.html")
        fig.write_html(plot_file)
        logger.info(f"Current market trade quantity plot saved to {plot_file}")

        ### Modify for Total Purchase Value Timeline
        df['total_purchase_value_timeline_adjusted'] = df.apply(
            lambda row: row['total_purchase_value'] if row['transaction_type'] == 'buy' else -row['total_purchase_value'],
            axis=1
        )

        # Combine 'market_slug' and 'outcome' into a unique label
        df['market_outcome_label'] = df['market_slug'] + ' (' + df['outcome'] + ')'

        # Create the scatter plot, now coloring by 'market_outcome_label'
        fig = px.scatter(
            df,
            x='timeStamp_erc1155',
            y='total_purchase_value_timeline_adjusted',
            color='market_outcome_label',  # Use the combined label for market and outcome
            title=f"Total Purchase Value Timeline for {username}",
            labels={
                'total_purchase_value_timeline_adjusted': 'Total Purchase Value',
                'timeStamp_erc1155': 'Transaction Time',
                'market_outcome_label': 'Market/Outcome'
            },
            hover_data=['market_slug', 'price_paid_per_token', 'outcome', 'hash'],
        )

        fig.update_layout(
            title={
                'text': f"Total Purchase Value Timeline for {username}",
                'y': 0.95,
                'x': 0.5,
                'xanchor': 'center',
                'yanchor': 'top',
                'font': {'size': 20}
            },
            margin=dict(t=60)
        )

        fig.add_annotation(
            text=info_text,
            xref="paper", yref="paper", showarrow=False, x=0.5, y=1.05, font=dict(size=12)
        )

        # Save the updated plot
        plot_file = os.path.join(plot_dir, f"{sanitized_username}_total_purchase_value_timeline_adjusted.html")
        fig.write_html(plot_file)
        logger.info(f"Total purchase value timeline plot saved to {plot_file}")


    def plot_total_purchase_value(df, user_info):
        """Create and save a scatter plot for total purchase value, accounting for buy and sell transactions."""
        # Ensure the directory exists
        os.makedirs("./plots/user_trades", exist_ok=True)
        plot_dir = "./plots/user_trades"

        username = user_info["username"]
        wallet_id = user_info["wallet_address"]

        # Sanitize only the filename, not the directory
        sanitized_username = sanitize_filename(username)

        info_text = (
            f"Username: {username} | Positions Value: {user_info['positions_value']} | "
            f"Profit/Loss: {user_info['profit_loss']} | Volume Traded: {user_info['volume_traded']} | "
            f"Markets Traded: {user_info['markets_traded']} | Wallet ID: {wallet_id}"
        )

        # Flag loss trades where to_erc1155 is zero address, transaction_type is sell,
        # and price_paid_per_token is NaN
        df['is_loss'] = df.apply(
            lambda row: (row['to_erc1155'] == '0x0000000000000000000000000000000000000000')
            and (row['transaction_type'] == 'sell')
            and pd.isna(row['price_paid_per_token']), axis=1)

        # Set shares and total purchase value to zero for loss trades
        df.loc[df['is_loss'], 'shares'] = 0
        df.loc[df['is_loss'], 'total_purchase_value'] = 0

        # Adjust the total purchase value based on the transaction type
        df['total_purchase_value_adjusted'] = df.apply(
            lambda row: row['total_purchase_value'] if row['transaction_type'] == 'buy' else -row['total_purchase_value'],
            axis=1
        )

        # Create the scatter plot for total purchase value over time
        fig = px.scatter(
            df,
            x='timeStamp_erc1155',  # Assuming this is the correct timestamp field
            y='total_purchase_value_adjusted',  # Adjusted values for buys and sells
            color='market_slug',  # Color points by market_slug
            title=f"Current Purchase Value Timeline for {username}",  # Update title to reflect "current"
            labels={'total_purchase_value_adjusted': 'Adjusted Purchase Value ($)',
                    'timeStamp_erc1155': 'Transaction Time'},
            hover_data=['market_slug', 'price_paid_per_token', 'outcome', 'hash'],
        )

        # Adjust title positioning and font size
        fig.update_layout(
            title={
                'text': f"Current Purchase Value Timeline for {username}",  # Update to "Current"
                'y': 0.95,
                'x': 0.5,
                'xanchor': 'center',
                'yanchor': 'top',
                'font': {'size': 20}
            },
            margin=dict(t=60)
        )

        fig.add_annotation(
            text=info_text,
            xref="paper", yref="paper", showarrow=False, x=0.5, y=1.05, font=dict(size=12)
        )

        # Save the scatter plot as an HTML file
        plot_file = os.path.join(plot_dir, f"{sanitized_username}_current_purchase_value_timeline.html")
        fig.write_html(plot_file)
        logger.info(f"Current purchase value timeline plot saved to {plot_file}")


    def create_and_save_pie_chart(df, user_info):
        """Create and save a pie chart for user's current holdings."""
        # Ensure the directory exists
        os.makedirs("./plots/user_trades", exist_ok=True)
        plot_dir = "./plots/user_trades"

        username = user_info["username"]
        wallet_id = user_info["wallet_address"]
        sanitized_username = sanitize_filename(username)

        info_text = (
            f"Username: {username} | Positions Value: {user_info['positions_value']} | "
            f"Profit/Loss: {user_info['profit_loss']} | Volume Traded: {user_info['volume_traded']} | "
            f"Markets Traded: {user_info['markets_traded']} | Wallet ID: {wallet_id}"
        )

        # Flag loss trades where to_erc1155 is zero address, transaction_type is sell,
        # and price_paid_per_token is NaN
        df['is_loss'] = df.apply(
            lambda row: (row['to_erc1155'] == '0x0000000000000000000000000000000000000000')
            and (row['transaction_type'] == 'sell')
            and pd.isna(row['price_paid_per_token']), axis=1)

        # Set shares and total purchase value to zero for loss trades
        df.loc[df['is_loss'], 'shares'] = 0

        df['shares_adjusted'] = df.apply(
lambda row: row['shares'] if row['transaction_type'] == 'buy' else -row['shares'], axis=1) holdings = df.groupby('market_slug').agg({'shares_adjusted': 'sum'}).reset_index() holdings = holdings.sort_values('shares_adjusted', ascending=False) threshold = 0.02 large_slices = holdings[holdings['shares_adjusted'] > holdings['shares_adjusted'].sum() * threshold] small_slices = holdings[holdings['shares_adjusted'] 0: all_data.extend(data['result']) page += 1 else: break # Stop if no more data is returned return pd.DataFrame(all_data) # Fetch ERC-20 transactions with pagination erc20_url = (f"https://api.polygonscan.com/api" f"?module=account" f"&action=tokentx" f"&address={wallet_address}" f"&startblock=0" f"&endblock=99999999" f"&sort=desc" f"&apikey={api_key}") erc20_df = fetch_paginated_data(erc20_url) # Fetch ERC-1155 transactions with pagination erc1155_url = (f"https://api.polygonscan.com/api" f"?module=account" f"&action=token1155tx" f"&address={wallet_address}" f"&startblock=0" f"&endblock=99999999" f"&sort=desc" f"&apikey={api_key}") erc1155_df = fetch_paginated_data(erc1155_url) if not erc20_df.empty and not erc1155_df.empty: return erc20_df, erc1155_df else: return None, None def fetch_wallet_addresses(skip_leaderboard, top_volume, top_profit): """ Fetch wallet addresses based on leaderboard data or manual input. Args: skip_leaderboard (bool): Whether to skip leaderboard fetching. top_volume (bool): Fetch top volume users. top_profit (bool): Fetch top profit users. Returns: list: A list of wallet addresses to process. 
""" # Manually specified wallet addresses manual_wallet_ids = [ '0x76527252D7FEd00dC4D08d794aFa1cCC36069C2a', # Add more wallet IDs as needed ] if not skip_leaderboard: leaderboard_wallet_ids = call_scrape_wallet_ids(top_volume=top_volume, top_profit=top_profit) wallet_addresses = list(set(manual_wallet_ids + leaderboard_wallet_ids)) # Remove duplicates else: wallet_addresses = manual_wallet_ids return wallet_addresses def main(wallet_addresses=None, skip_leaderboard=False, top_volume=False, top_profit=False, plot=True, latest_price_mode=False): """ Main function to process wallet data and generate plots. Args: wallet_addresses (list): A list of wallet addresses to process (if provided). skip_leaderboard (bool): Whether to skip fetching leaderboard data. top_volume (bool): Whether to fetch top volume users. top_profit (bool): Whether to fetch top profit users. plot (bool): Whether to generate plots for the user data. latest_price_mode (bool): If True, only retrieve the latest prices, no plotting. """ # Load environment variables load_dotenv("keys.env") api_key = os.getenv('POLYGONSCAN_API_KEY') if not wallet_addresses: # Fetch wallet addresses if not provided wallet_addresses = fetch_wallet_addresses(skip_leaderboard, top_volume, top_profit) # Process wallet data and optionally generate plots process_and_plot_user_data(wallet_addresses, api_key, plot=plot, latest_price_mode=latest_price_mode) if __name__ == "__main__": # Use argparse to accept command-line arguments parser = argparse.ArgumentParser(description='Process wallet data for specific wallet addresses.') parser.add_argument( '--wallets', nargs='+', # This will accept multiple wallet IDs help='List of wallet addresses to process.' 
) parser.add_argument('--skip-leaderboard', action='store_true', help='Skip leaderboard fetching.') parser.add_argument('--top-volume', action='store_true', help='Fetch top volume users.') parser.add_argument('--top-profit', action='store_true', help='Fetch top profit users.') parser.add_argument('--no-plot', action='store_true', help='Disable plot generation.') parser.add_argument('--latest-price-mode', action='store_true', help='Only retrieve the latest prices, no plotting.') args = parser.parse_args() # Call the main function with the parsed arguments main( wallet_addresses=args.wallets, skip_leaderboard=args.skip_leaderboard, top_volume=args.top_volume, top_profit=args.top_profit, plot=not args.no_plot, latest_price_mode=args.latest_price_mode )

- Arbitrage in Polymarket.com

by JeremyWhittaker

About a month ago, I noticed some arbitrage opportunities on Polymarket.com. However, the liquidity on the platform isn’t significant enough for me to pursue this further. I’m planning to return to stock trading. That said, I still have around $60,000 actively invested in various markets on Polymarket. Most of these trades will expire after the election, so I’ll close them out then. Below, I’ll walk you through these trades and offer some free code for anyone interested in exploring this market.

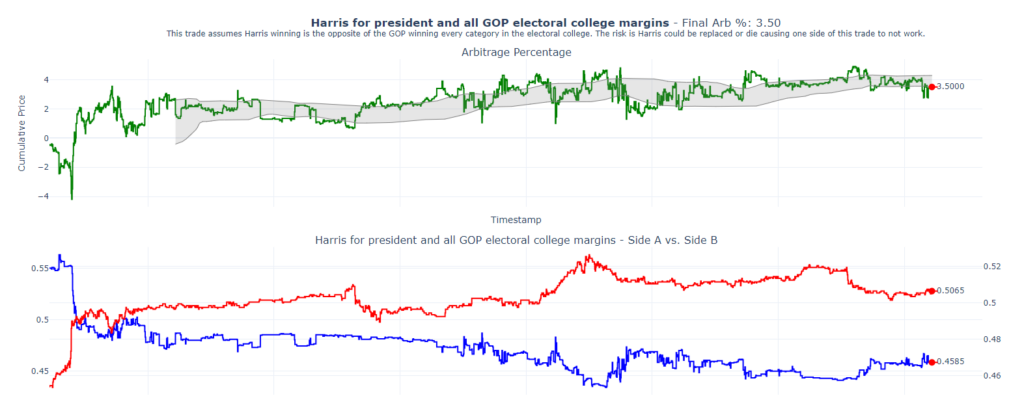

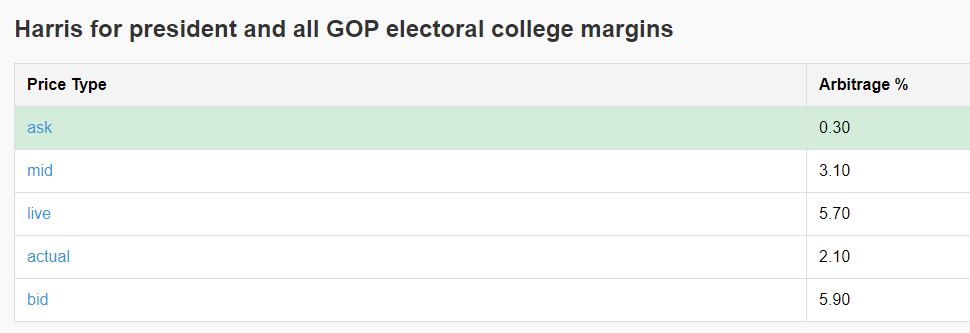

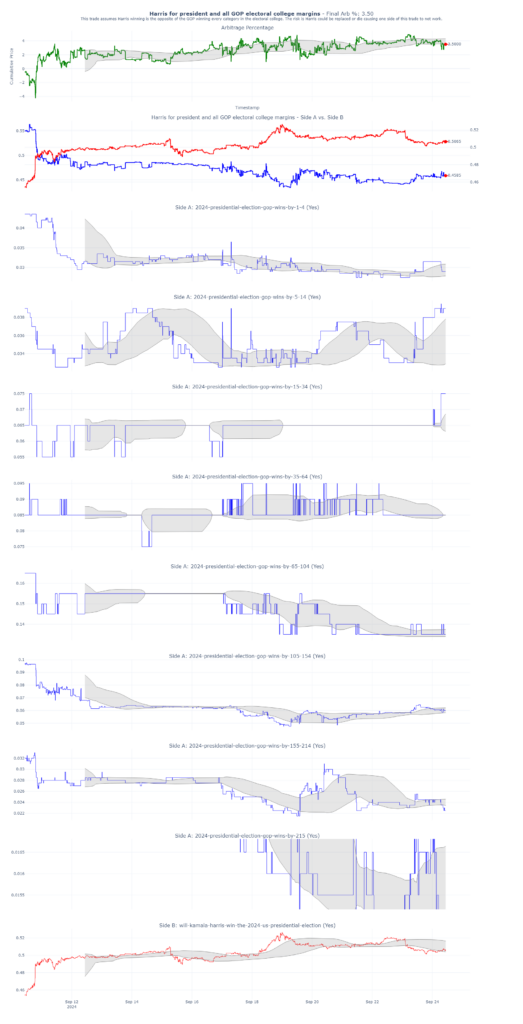

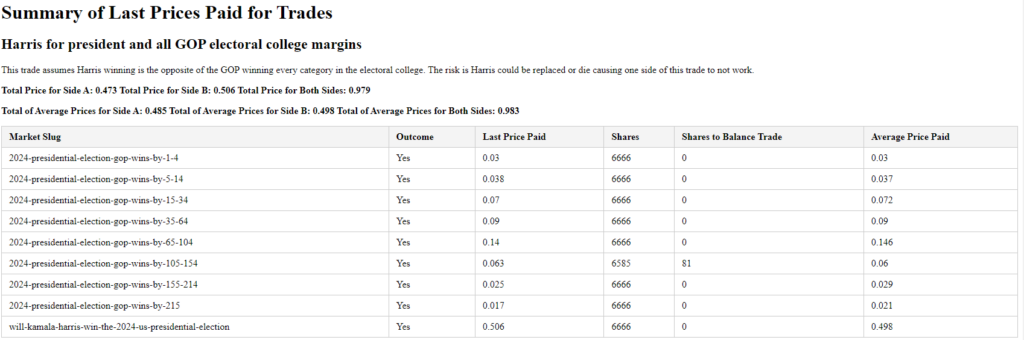

Arb 1: Harris for President and buy all GOP Electoral College Margins

The strategy here is straightforward: we’re betting that Kamala Harris will win the presidency while simultaneously buying all the GOP Electoral College margins. Essentially, these two positions are opposites. If the combined cost of both sides is less than 1, the difference is the guaranteed profit per share, which is our arbitrage. As of today, this trade is yielding a 3.5% arbitrage with 41 days remaining until the election. Scaled linearly to a full year (3.5% × 365 / 41), that is roughly a 31% annualized return.
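The arithmetic behind this kind of trade can be sketched in a few lines. The prices below are made up for illustration (they are not my actual fills), and the annualization uses simple linear scaling, which is only one possible convention; compounding would give a different figure.

```python
def arbitrage_pct(prices):
    """Arbitrage in percent for a bundle of positions where exactly one pays $1.

    `prices` holds the per-share cost of every leg in the bundle.
    """
    total_cost = sum(prices)
    return (1.0 - total_cost) * 100.0


def simple_annualized_pct(arb_pct, days_to_resolution):
    """Scale a return linearly to one year (no compounding)."""
    return arb_pct * 365.0 / days_to_resolution


# Hypothetical prices: one Harris "Yes" share plus one "Yes" share in each
# of the eight GOP Electoral College margin markets.
harris_yes = 0.505
gop_margin_yes = [0.02, 0.08, 0.15, 0.12, 0.06, 0.02, 0.005, 0.005]

arb = arbitrage_pct([harris_yes] + gop_margin_yes)
annualized = simple_annualized_pct(arb, days_to_resolution=41)
print(f"arb: {arb:.2f}%  simple annualized: {annualized:.1f}%")
```

The bundle only behaves like an arbitrage if the legs really do cover every outcome, which is why the market rules matter.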

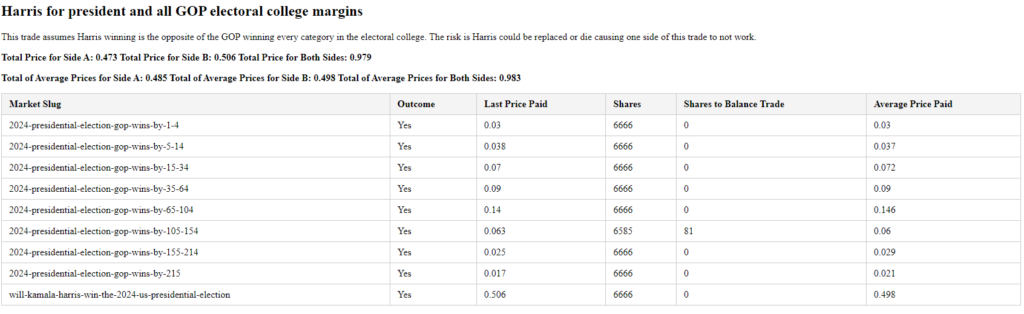

Below is a plot of my trades on Polymarket.com, showing how I’ve structured them. You can see I’ve bought nearly equal numbers of shares in each of the possible outcomes.

Here’s a summary of my trades placed live on Polymarket. The average cost of these positions is 0.983, meaning my expected return is 1 – 0.983, or 1.7%. My last trade had a cost basis of 0.979, yielding a 2.1% return.
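As a rough illustration of how an average cost like this comes about, here is a minimal sketch that share-weights the cost of several purchases. The fill sizes are hypothetical and chosen only so the average lands on 0.983; `average_cost` is an illustrative helper, not part of my scripts.

```python
def average_cost(fills):
    """Share-weighted average cost per fully hedged bundle.

    `fills` is a list of (shares, cost_per_bundle) tuples, where
    cost_per_bundle is the summed price of one share in every leg.
    """
    total_shares = sum(shares for shares, _ in fills)
    total_spent = sum(shares * cost for shares, cost in fills)
    return total_spent / total_shares


# Hypothetical fills: an earlier, more expensive purchase and a later one at 0.979.
fills = [(1000, 0.985), (500, 0.979)]

avg = average_cost(fills)
profit_per_share_pct = (1.0 - avg) * 100.0       # the "1 - cost" figure used above
return_on_capital_pct = (1.0 - avg) / avg * 100  # profit divided by money put in
print(f"avg cost: {avg:.3f}, profit per share: {profit_per_share_pct:.2f}%, "
      f"return on capital: {return_on_capital_pct:.2f}%")
```

Measured against the capital actually deployed, the return is slightly higher than 1 minus the cost, because the profit is earned on less than $1 of outlay.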

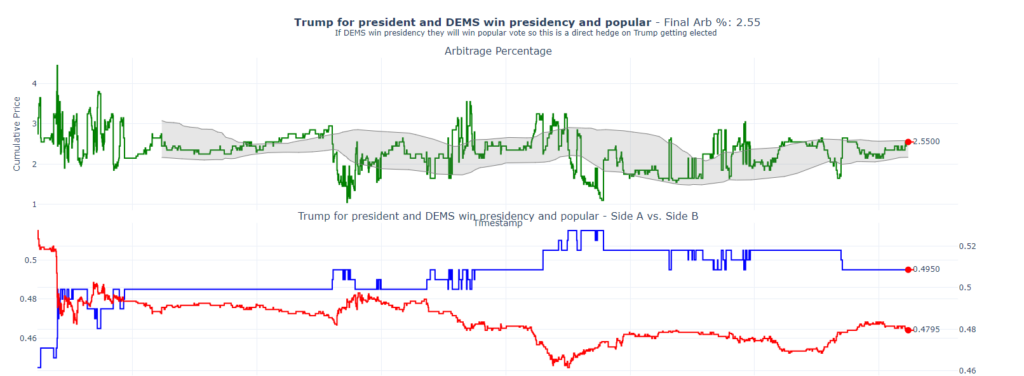

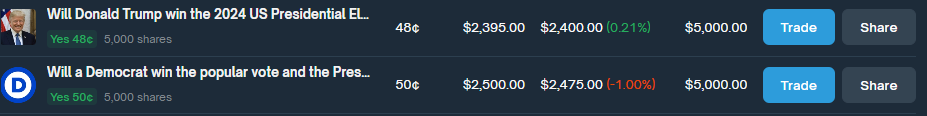

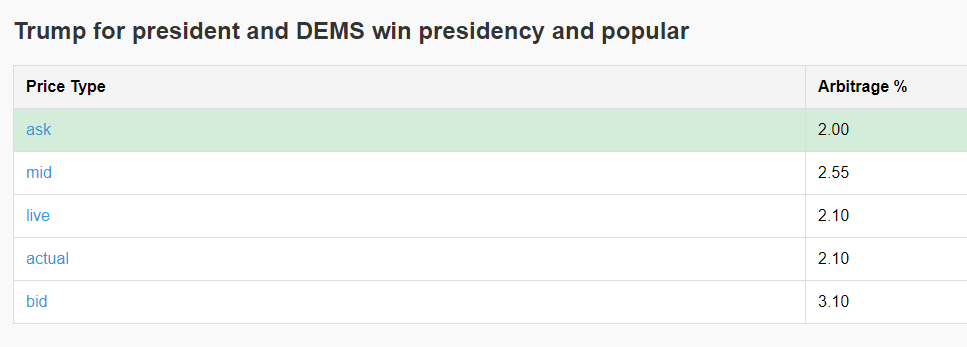

Arb 2: Trump for President hedged with DEMS to win the popular vote and presidency

This strategy is showing a 2.55% arbitrage. In this scenario, we’re betting on Trump to win, while hedging by betting that the Democrats will win both the popular vote and the presidency. While this is not a perfect hedge (since it’s possible for the Democrats to win the presidency without the popular vote), based on my modeling, this scenario is highly unlikely. Therefore, I consider this hedge to be sound.
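To make the imperfect-hedge risk concrete, here is a minimal sketch of the payoff in each scenario. The prices are hypothetical (picked so the bundle costs 0.9745), the 1% gap probability is just an example input rather than my model's output, and the sketch assumes the winner is either Trump or a Democrat.

```python
def hedge_outcomes(trump_yes, dem_both_yes, shares=1.0):
    """Profit per scenario when holding equal shares of both legs.

    Each winning share pays $1. Assumes the winner is either Trump or a Democrat.
    """
    cost = shares * (trump_yes + dem_both_yes)
    return {
        "trump_wins": shares - cost,
        "dem_wins_presidency_and_popular_vote": shares - cost,
        "dem_wins_presidency_only": -cost,  # neither leg pays out
    }


def expected_profit(outcomes, p_gap):
    """Expected profit when the uncovered scenario has probability p_gap.

    Both covered scenarios pay the same, so how the remaining probability
    is split between them does not matter.
    """
    covered = outcomes["trump_wins"]
    gap = outcomes["dem_wins_presidency_only"]
    return (1.0 - p_gap) * covered + p_gap * gap


outcomes = hedge_outcomes(trump_yes=0.49, dem_both_yes=0.4845)
ev = expected_profit(outcomes, p_gap=0.01)
break_even = expected_profit(outcomes, p_gap=0.0255)
print(outcomes)
print(f"expected profit per share at 1% gap risk: {ev:.4f}")
```

With a 2.55% edge, the expected profit per share works out to 0.0255 minus the gap probability, so the trade only has positive expectation if the uncovered scenario is less likely than about 2.55%.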

Below are my actual trades, demonstrating that I have an equal number of shares for each side of the bet.

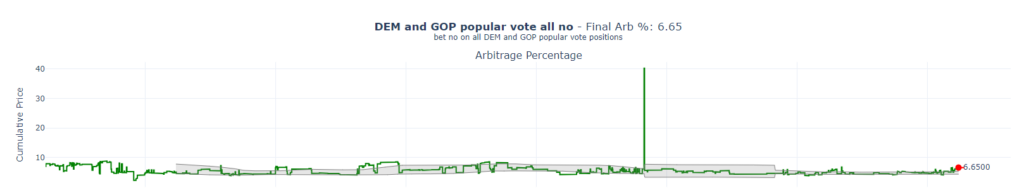

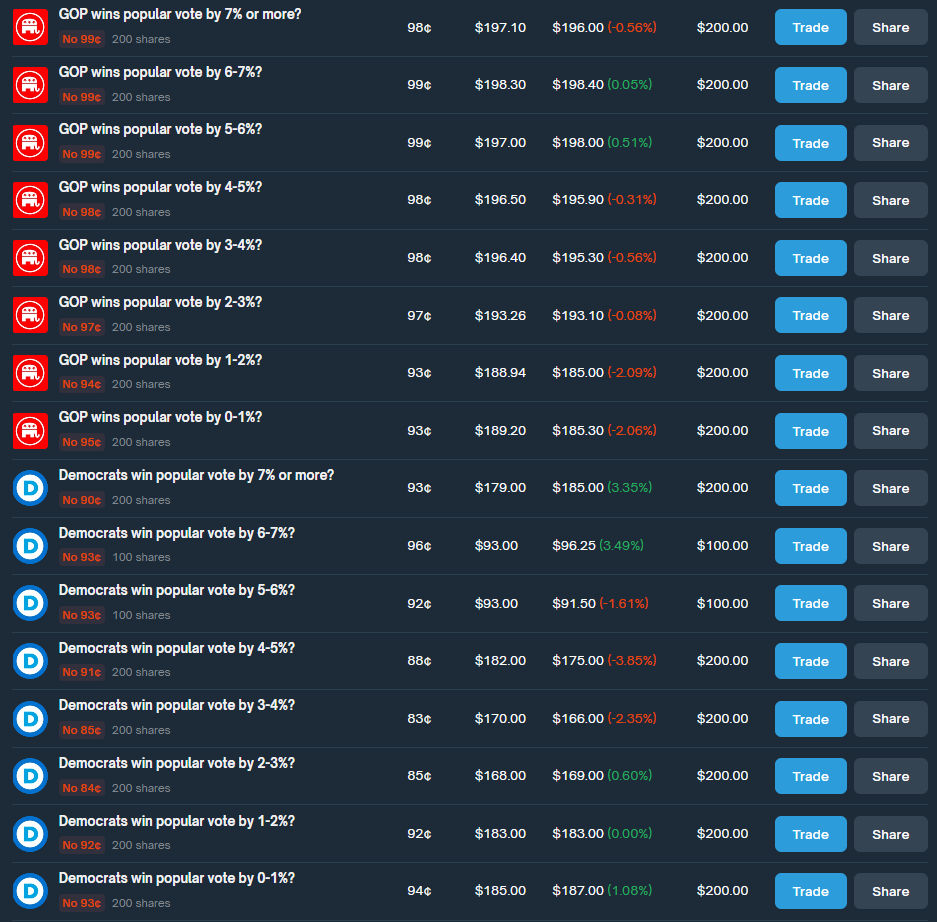

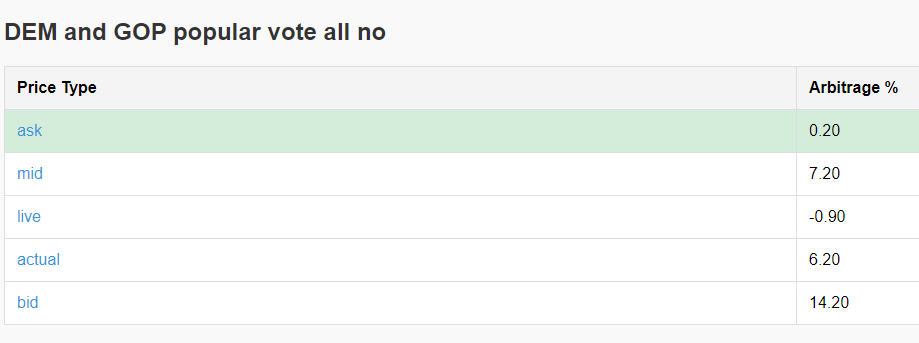

Arb 3: Buy all the no outcomes for DEM and GOP popular vote

For this trade, I’ve bought the “No” outcome on every possible bet for the popular vote. This currently presents a 6.65% arbitrage opportunity.

Here are my actual trades. All but one of these bets will win on election day, so the combined profit from the winning trades needs to exceed the amount lost on the single losing trade; the difference is the overall return.
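The "all No" logic can be sketched as follows. This assumes the markets are mutually exclusive and exhaustive (exactly one resolves "Yes") and that the same number of "No" shares is held in each; the prices are made up, and expressing the result as a percentage of cost is my choice for this example.

```python
def all_no_arbitrage(no_prices, shares=1.0):
    """Guaranteed profit from buying `shares` of "No" in every market.

    Assumes exactly one market resolves "Yes", so every "No" but one pays $1.
    Returns (profit, profit as a percentage of total cost).
    """
    n = len(no_prices)
    total_cost = shares * sum(no_prices)
    guaranteed_payout = shares * (n - 1)  # the single losing "No" pays nothing
    profit = guaranteed_payout - total_cost
    return profit, profit / total_cost * 100.0


# Hypothetical "No" prices for 16 popular-vote margin markets (8 GOP + 8 DEM).
no_prices = [0.95] * 8 + [0.90] * 4 + [0.85] * 4

profit, pct = all_no_arbitrage(no_prices)
print(f"profit per share set: {profit:.2f} ({pct:.2f}% of cost)")
```

With equal share counts the payout is the same no matter which market ends up being the losing one, which is what makes the position behave like an arbitrage rather than a directional bet.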

Last arb:

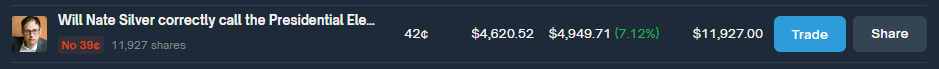

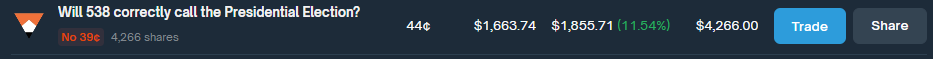

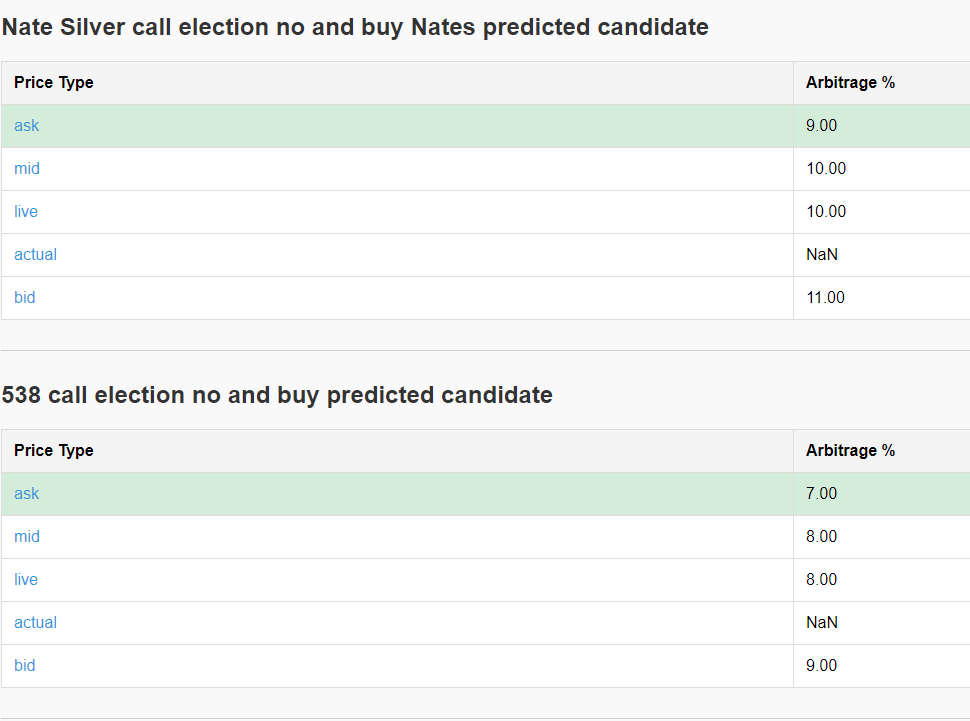

I’ve written in depth about my strategy of betting against Nate Silver and the FiveThirtyEight predictions in a previous blog post, which you can find here. That post details how I plan to trade these two markets.

Read the Rules Carefully

One important tip: Always read the rules of each trade carefully. Some positions may seem like arbitrage opportunities, but they can carry hidden risks. For example, if a candidate were to be assassinated, you could lose all your money, despite thinking you had a safe arbitrage position.

Spread Matters

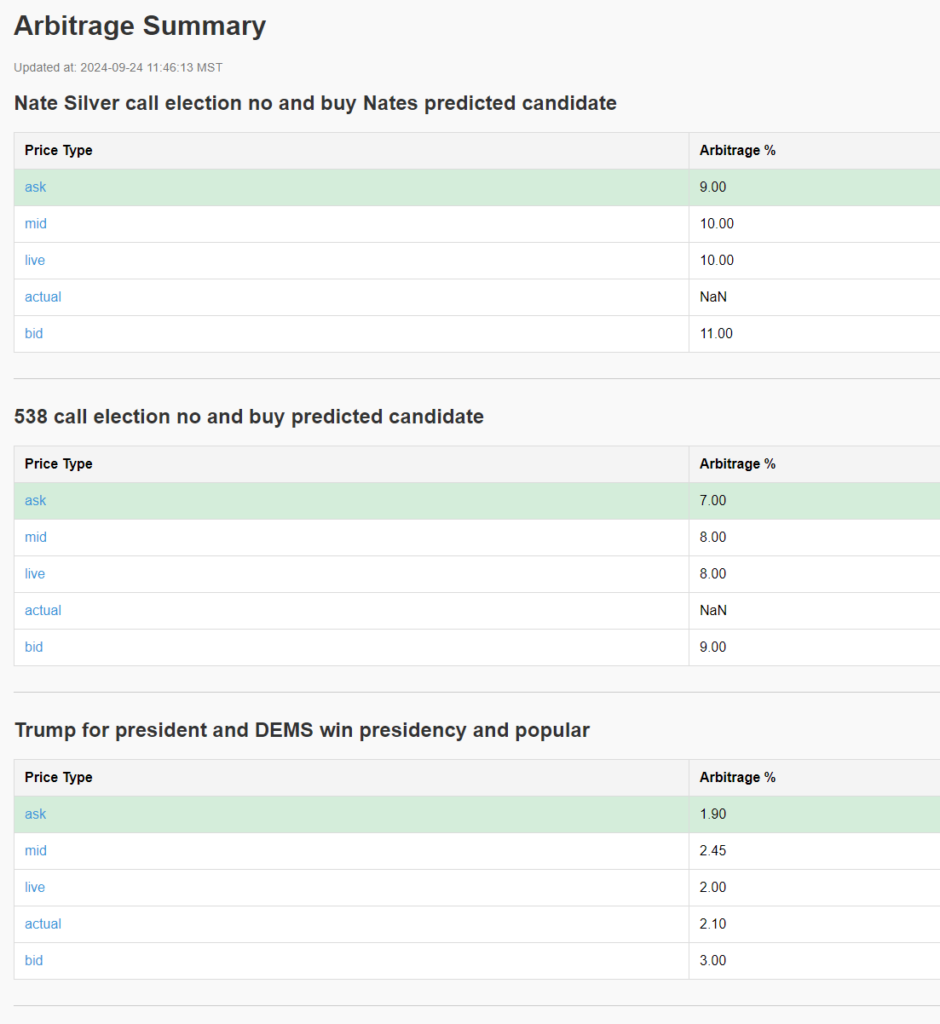

One of the biggest challenges I’ve encountered is market impact. When I place a trade, I often move the entire market in my direction due to the low liquidity. This creates discrepancies between the bid, ask, mid, live, and actual trade prices. Below are examples of this from the trades I shared earlier.
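A simple way to see why the quoted price and the actual trade price diverge is to walk a thin order book. The book below is entirely hypothetical, and `average_fill_price` is an illustrative helper rather than a function from my scripts.

```python
def average_fill_price(asks, shares_wanted):
    """Average price paid when buying `shares_wanted` by walking up the asks.

    `asks` is a list of (price, size) levels.
    """
    remaining = shares_wanted
    spent = 0.0
    for price, size in sorted(asks):  # cheapest level first
        take = min(remaining, size)
        spent += take * price
        remaining -= take
        if remaining <= 0:
            break
    if remaining > 0:
        raise ValueError("not enough liquidity on the ask side")
    return spent / shares_wanted


# Hypothetical thin order book: (price, size) per level.
bids = [(0.48, 300), (0.47, 1000)]
asks = [(0.50, 200), (0.52, 500), (0.55, 1000)]

best_bid = max(price for price, _ in bids)
best_ask = min(price for price, _ in asks)
mid = (best_bid + best_ask) / 2
fill = average_fill_price(asks, shares_wanted=1000)
print(f"bid {best_bid:.2f} / ask {best_ask:.2f} / mid {mid:.2f} / avg fill {fill:.3f}")
```

In this made-up book the best ask is 0.50, but a 1,000-share order pays 0.525 on average, which is enough to erase a 2% edge.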

The Code:

I spent a tremendous amount of time writing code to break down Polymarket’s data.

strategies.py

This file contains all of the hedge opportunities that I’m monitoring.

trades = [ { "trade_name": "Harris for president and all GOP electoral college margins", "subtitle": "This trade assumes Harris winning is the opposite of the GOP winning every category " "in the electoral college. The risk is Harris could be replaced or die causing one " "side of this trade to not work.", "side_a_trades": [ ("2024-presidential-election-gop-wins-by-1-4", "Yes"), ("2024-presidential-election-gop-wins-by-5-14", "Yes"), ("2024-presidential-election-gop-wins-by-15-34", "Yes"), ("2024-presidential-election-gop-wins-by-35-64", "Yes"), ("2024-presidential-election-gop-wins-by-65-104", "Yes"), ("2024-presidential-election-gop-wins-by-105-154", "Yes"), ("2024-presidential-election-gop-wins-by-155-214", "Yes"), ("2024-presidential-election-gop-wins-by-215", "Yes"), ], "side_b_trades": [ ("will-kamala-harris-win-the-2024-us-presidential-election", "Yes"), ], "method": "balanced" }, { "trade_name": "DEM and REP electoral college all no", "subtitle": "bet no on all DEM and REP electoral college positions", "positions": [ ("2024-presidential-election-gop-wins-by-215", "No"), ("2024-presidential-election-gop-wins-by-155-214", "No"), ("2024-presidential-election-gop-wins-by-65-104", "No"), ("2024-presidential-election-gop-wins-by-35-64", "No"), ("2024-presidential-election-gop-wins-by-15-34", "No"), ("2024-presidential-election-gop-wins-by-1-4", "No"), ("2024-presidential-election-gop-wins-by-5-14", "No"), ("2024-presidential-election-gop-wins-by-105-154", "No"), ("2024-presidential-election-democrats-win-by-0-4", "No"), ("2024-presidential-election-democrats-win-by-5-14", "No"), ("2024-presidential-election-democrats-win-by-15-34", "No"), ("2024-presidential-election-democrats-win-by-35-64", "No"), ("2024-presidential-election-democrats-win-by-65-104", "No"), ("2024-presidential-election-democrats-win-by-105-154", "No"), ("2024-presidential-election-democrats-win-by-155-214", "No"), ("2024-presidential-election-democrats-win-by-215", "No"), ], "method": "all_no" 
}, { "trade_name": "DEM and GOP popular vote all no", "subtitle": "bet no on all DEM and GOP popular vote positions", "positions": [ ("gop-wins-popular-vote-by-more-than-7", "No"), ("gop-wins-popular-vote-by-6-7", "No"), ("gop-wins-popular-vote-by-5-6", "No"), ("gop-wins-popular-vote-by-4-5", "No"), ("gop-wins-popular-vote-by-3-4", "No"), ("gop-wins-popular-vote-by-2-3", "No"), ("gop-wins-popular-vote-by-1-2", "No"), ("gop-wins-popular-vote-by-0-1", "No"), ("democrats-win-popular-vote-by-over-7", "No"), ("democrats-win-popular-vote-by-6-7", "No"), ("democrats-win-popular-vote-by-5-6", "No"), ("democrats-win-popular-vote-by-4-5", "No"), ("democrats-win-popular-vote-by-3-4", "No"), ("democrats-win-popular-vote-by-2-3", "No"), ("democrats-win-popular-vote-by-1-2", "No"), ("democrats-win-popular-vote-by-0-1", "No"), ], "method": "all_no" }, { "trade_name": "" "Trump for president and DEMS win presidency and popular", "subtitle": "If DEMS win presidency they will win popular vote so this is a direct hedge " "on Trump getting elected ", "side_a_trades": [ ("will-a-democrat-win-the-popular-vote-and-the-presidency", "Yes"), ], "side_b_trades": [ ("will-donald-trump-win-the-2024-us-presidential-election", "Yes"), ], "method": "balanced" }, { "trade_name": "" "DEM win presidency hedged on Trump", "subtitle": "REP win presidency hedged on Kamala winning", "side_a_trades": [ ("which-party-will-win-the-2024-united-states-presidential-election", "Democratic"), ], "side_b_trades": [ ("will-donald-trump-win-the-2024-us-presidential-election", "Yes"), ], "method": "balanced" }, { "trade_name": "" "REP win presidency hedged on Kamala", "subtitle": "REP win presidency hedged on Kamala winning", "side_a_trades": [ ("which-party-will-win-the-2024-united-states-presidential-election", "Republican"), ], "side_b_trades": [ ("will-kamala-harris-win-the-2024-us-presidential-election", "Yes"), ], "method": "balanced" }, { "trade_name": "" "Trump popular vote hedged with popular vote 
margins", "subtitle": "Trump wins popular vote and then buy all the margins for DEMS on the popular vote", "side_a_trades": [ ("will-donald-trump-win-the-popular-vote-in-the-2024-presidential-election", "Yes"), ], "side_b_trades": [ ("democrats-win-popular-vote-by-0-1", "Yes"), ("democrats-win-popular-vote-by-1-2", "Yes"), ("democrats-win-popular-vote-by-2-3", "Yes"), ("democrats-win-popular-vote-by-3-4", "Yes"), ("democrats-win-popular-vote-by-4-5", "Yes"), ("democrats-win-popular-vote-by-5-6", "Yes"), ("democrats-win-popular-vote-by-6-7", "Yes"), ("democrats-win-popular-vote-by-over-7", "Yes"), ], "method": "balanced" }, { "trade_name": "" "Kamala popular vote hedged with popular vote margins", "subtitle": "Kamala wins popular vote and then buy all the margins for REP on the popular vote", "side_a_trades": [ ("will-kamala-harris-win-the-popular-vote-in-the-2024-presidential-election", "Yes"), ], "side_b_trades": [ ("gop-wins-popular-vote-by-0-1", "Yes"), ("gop-wins-popular-vote-by-1-2", "Yes"), ("gop-wins-popular-vote-by-2-3", "Yes"), ("gop-wins-popular-vote-by-3-4", "Yes"), ("gop-wins-popular-vote-by-4-5", "Yes"), ("gop-wins-popular-vote-by-5-6", "Yes"), ("gop-wins-popular-vote-by-6-7", "Yes"), ("gop-wins-popular-vote-by-more-than-7", "Yes"), ], "method": "balanced" }, { "trade_name": "DEM popular vote all no", "subtitle": "bet no on all DEM popular vote positions", "positions": [ ("democrats-win-popular-vote-by-over-7", "No"), ("democrats-win-popular-vote-by-6-7", "No"), ("democrats-win-popular-vote-by-5-6", "No"), ("democrats-win-popular-vote-by-4-5", "No"), ("democrats-win-popular-vote-by-3-4", "No"), ("democrats-win-popular-vote-by-2-3", "No"), ("democrats-win-popular-vote-by-1-2", "No"), ("democrats-win-popular-vote-by-0-1", "No"), ], "method": "all_no" }, { "trade_name": "GOP popular vote all no", "subtitle": "bet no on all GOP popular vote positions", "positions": [ ("gop-wins-popular-vote-by-more-than-7", "No"), ("gop-wins-popular-vote-by-6-7", "No"), 
("gop-wins-popular-vote-by-5-6", "No"), ("gop-wins-popular-vote-by-4-5", "No"), ("gop-wins-popular-vote-by-3-4", "No"), ("gop-wins-popular-vote-by-2-3", "No"), ("gop-wins-popular-vote-by-1-2", "No"), ("gop-wins-popular-vote-by-0-1", "No"), ], "method": "all_no" }, { "trade_name": "Trump for president and all DEM electoral college margins", "subtitle" : "This trade assumes Trump winning is the opposite of the DEM winning every category " "in the electoral college. The risk is Trump could be replaced or die causing one " "side of this trade to not work.", "side_a_trades": [ ("2024-presidential-election-democrats-win-by-0-4", "Yes"), ("2024-presidential-election-democrats-win-by-5-14", "Yes"), ("2024-presidential-election-democrats-win-by-15-34", "Yes"), ("2024-presidential-election-democrats-win-by-35-64", "Yes"), ("2024-presidential-election-democrats-win-by-65-104", "Yes"), ("2024-presidential-election-democrats-win-by-105-154", "Yes"), ("2024-presidential-election-democrats-win-by-155-214", "Yes"), ("2024-presidential-election-democrats-win-by-215", "Yes"), ], "side_b_trades": [ ("will-donald-trump-win-the-2024-us-presidential-election", "Yes"), ], "method": "balanced" }, { "trade_name": "Electoral College All GOP ALL DEM YES", "subtitle": "This is a truely hedged trade. 
Bet all REP electoral college slots and bet all" "DEM electoral college slots", "side_a_trades": [ ("2024-presidential-election-gop-wins-by-1-4", "Yes"), ("2024-presidential-election-gop-wins-by-5-14", "Yes"), ("2024-presidential-election-gop-wins-by-15-34", "Yes"), ("2024-presidential-election-gop-wins-by-35-64", "Yes"), ("2024-presidential-election-gop-wins-by-65-104", "Yes"), ("2024-presidential-election-gop-wins-by-105-154", "Yes"), ("2024-presidential-election-gop-wins-by-155-214", "Yes"), ("2024-presidential-election-gop-wins-by-215", "Yes"), ], "side_b_trades": [ ("2024-presidential-election-democrats-win-by-0-4", "Yes"), ("2024-presidential-election-democrats-win-by-5-14", "Yes"), ("2024-presidential-election-democrats-win-by-15-34", "Yes"), ("2024-presidential-election-democrats-win-by-35-64", "Yes"), ("2024-presidential-election-democrats-win-by-65-104", "Yes"), ("2024-presidential-election-democrats-win-by-105-154", "Yes"), ("2024-presidential-election-democrats-win-by-155-214", "Yes"), ("2024-presidential-election-democrats-win-by-215", "Yes"), ], "method": "balanced" }, { "trade_name": "DEM electoral college all no", "subtitle": "bet no on all DEM electoral college positions", "positions": [ ("2024-presidential-election-democrats-win-by-0-4", "No"), ("2024-presidential-election-democrats-win-by-5-14", "No"), ("2024-presidential-election-democrats-win-by-15-34", "No"), ("2024-presidential-election-democrats-win-by-35-64", "No"), ("2024-presidential-election-democrats-win-by-65-104", "No"), ("2024-presidential-election-democrats-win-by-105-154", "No"), ("2024-presidential-election-democrats-win-by-155-214", "No"), ("2024-presidential-election-democrats-win-by-215", "No"), ], "method": "all_no" }, { "trade_name": "REP electoral college all no", "subtitle": "bet no on all REP electoral college positions", "positions": [ ("2024-presidential-election-gop-wins-by-1-4", "No"), ("2024-presidential-election-gop-wins-by-5-14", "No"), 
("2024-presidential-election-gop-wins-by-15-34", "No"), ("2024-presidential-election-gop-wins-by-35-64", "No"), ("2024-presidential-election-gop-wins-by-65-104", "No"), ("2024-presidential-election-gop-wins-by-105-154", "No"), ("2024-presidential-election-gop-wins-by-155-214", "No"), ("2024-presidential-election-gop-wins-by-215", "No"), ], "method": "all_no" }, { "trade_name": "FED Rates in Sept all no", "subtitle": "bet no on all FED possibilities in Sept", "positions": [ ("fed-decreases-interest-rates-by-50-bps-after-september-2024-meeting", "No"), ("fed-decreases-interest-rates-by-25-bps-after-september-2024-meeting", "No"), ("no-change-in-fed-interest-rates-after-2024-september-meeting", "No"), ], "method": "all_no" }, { "trade_name": "Balance of power all no", "subtitle": "bet no on some of the balance of power outcomes", "positions": [ ("2024-balance-of-power-r-prez-r-senate-r-house", "No"), ("2024-election-democratic-presidency-and-house-republican-senate", "No"), ("democratic-sweep-in-2024-election", "No"), ("2024-balance-of-power-republican-presidency-and-senate-democratic-house", "No"), ], "method": "all_no" }, { "trade_name": "Presidential party D President Trump", "subtitle": "Bet on the candidate to win and their party to lose hedging the bet", "side_a_trades": [ ("which-party-will-win-the-2024-united-states-presidential-election", "Democratic"), ], "side_b_trades": [ ("will-kamala-harris-win-the-2024-us-presidential-election", "No"), ], "method": "balanced" }, { "trade_name": "Presidential party R President Harris", "subtitle": "Bet on the candidate to win and their party to lose hedging the bet", "side_a_trades": [ ("which-party-will-win-the-2024-united-states-presidential-election", "Republican"), ], "side_b_trades": [ ("will-kamala-harris-win-the-2024-us-presidential-election", "Yes"), ], "method": "balanced" }, # { # "trade_name": "Trump Vance ticket and vance replaced", # "subtitle": "Betting on the trump and vance ticket as well as vanced 
replaced", # "side_a_trades": [ # ("will-trump-vance-be-gop-ticket-on-election-day", "Yes"), # ], # "side_b_trades": [ # ("jd-vance-steps-down-as-republican-vp-nominee", "Yes"), # # ], # "method": "balanced" # }, { "trade_name": "Other DEM wins election no and DEM wins election Yes", "subtitle": "Taking trade on other dem besides biden to win but then saying DEMS win Presidency", "side_a_trades": [ ("democrat-other-than-biden-wins-the-presidential-election", "No"), ], "side_b_trades": [ ("which-party-will-win-the-2024-united-states-presidential-election", "Democratic"), ], "method": "balanced" }, { "trade_name": "538 call election no and buy predicted candidate", "subtitle": "538 will probably call the election correctly as indicated by the numbers. But if the day before" "the election as long as you buy the same number of shares as the winning candidate cheaper than" "the inverse of the price you paid for these shares you will profit", "side_a_trades": [ ("will-538-correctly-call-the-presidential-election", "No"), ], "side_b_trades": [ ("which-party-will-win-the-2024-united-states-presidential-election", "Republican"), ], "method": "balanced" }, { "trade_name": "Nate Silver call election no and buy Nates predicted candidate", "subtitle": "Nate Silver will probably call the election correctly as indicated by the numbers. But if the day before" "the election as long as you buy the same number of shares as the winning candidate cheaper than" "the inverse of the price you paid for these shares you will profit", "side_a_trades": [ ("will-nate-silver-correctly-call-the-presidential-election", "No"), ], "side_b_trades": [ ("which-party-will-win-the-2024-united-states-presidential-election", "Republican"), ], "method": "balanced" }, # { # "trade_name": "DEM solid red No", # "subtitle": "The main state the DEMS have a chance in is OH. So the opposite of DEMS winning a" # "solid red state, no is the DEMS actually winning the most probable state OH(10.5%)" # ". 
All of the rest of these states carry a < 10% chance", # "side_a_trades": [ # ("us-presidential-election-democrats-win-a-solid-red-state", "No"), # ], # "side_b_trades": # [ # # ("will-a-democrat-win-alabama-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-democrat-win-alaska-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-democrat-win-arkansas-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-democrat-win-idaho-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-democrat-win-indiana-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-democrat-win-iowa-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-democrat-win-kansas-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-democrat-win-kentucky-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-democrat-win-louisiana-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-democrat-win-mississippi-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-democrat-win-missouri-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-democrat-win-montana-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-democrat-win-nebraska-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-democrat-win-north-dakota-in-the-2024-us-presidential-election", "Yes"), # ("will-a-democrat-win-ohio-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-democrat-win-oklahoma-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-democrat-win-south-carolina-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-democrat-win-south-dakota-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-democrat-win-tennessee-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-democrat-win-utah-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-democrat-win-west-virginia-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-democrat-win-wyoming-in-the-2024-us-presidential-election", "Yes") # ], # "method": 
"balanced" # # }, # { # "trade_name": "REP solid blue No", # "subtitle": "The main state the REPS have a chance in is VA. So the opposite of REPS winning a" # "solid blue state, no is the REPS actually winning the most probable state VA(15%). All" # "of the rest of these states carry a < 10% chance", # "side_a_trades": [ # ("presidential-election-republicans-win-a-solid-blue-state", "No"), # ], # "side_b_trades": # [ # # ("will-a-republican-win-california-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-republican-win-colorado-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-republican-win-connecticut-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-republican-win-delaware-presidential-election", "Yes"), # # ("will-a-republican-win-hawaii-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-republican-win-illinois-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-republican-win-maryland-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-republican-win-massachusetts-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-republican-win-new-jersey-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-republican-win-new-mexico-presidential-election", "Yes"), # # ("will-a-republican-win-new-york-presidential-election", "Yes"), # # ("will-a-republican-win-oregon-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-republican-win-rhode-island-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-republican-win-vermont-in-the-2024-us-presidential-election", "Yes"), # ("will-a-republican-win-virginia-in-the-2024-us-presidential-election", "Yes"), # # ("will-a-reoublican-win-washington-in-the-2024-us-presidential-election", "Yes") # ], # "method": "balanced" # # }, # { # "trade_name": "Trump wins every swing state yes", # "subtitle": "This trade bets that trump will win every swing state(cheap). Then it bets that " # "harris will win MI(swing state). 
Harris is most favored to win MI 46.5/43.6 by " # "Nate Silver and 61% on Polymarket. The theory here is if Trump wins MI then he" # "should also win the other swing states given that this is the largest margin state.", # "side_a_trades": [ # ("trump-wins-every-swing-state", "Yes"), # ], # "side_b_trades": [ # ("will-a-democrat-win-arizona-presidential-election", "Yes"), # # ("will-a-democrat-win-georgia-presidential-election", "Yes"), # ("will-a-democrat-win-michigan-presidential-election", "Yes"), # # ("will-a-democrat-win-nevada-presidential-election", "Yes"), # # ("will-a-democrat-win-north-carolina-presidential-election", "Yes"), # # ("will-a-democrat-win-pennsylvania-presidential-election", "Yes"), # # ("will-a-democrat-win-wisconsin-presidential-election", "Yes"), # # ], # "method": "balanced" # # }, # { # "trade_name": "Harris wins every swing state yes", # "subtitle": "This trade bets that Harris will win every swing state(cheap). Then it bets that " # "Trump will win GA(swing state). Trump is most favored to win GA 46.8/45.3 by " # "Nate Silver and 61% on Polymarket. 
The theory here is if Harris wins GA then she" # "should also win the other swing states given that this is the largest margin state.", # "side_a_trades": [ # ("will-kamala-harris-win-every-swing-state", "Yes"), # ], # "side_b_trades": [ # # ("will-a-democrat-win-arizona-presidential-election", "No"), # ("will-a-democrat-win-georgia-presidential-election", "No"), # # ("will-a-democrat-win-michigan-presidential-election", "No"), # # ("will-a-democrat-win-nevada-presidential-election", "No"), # # ("will-a-democrat-win-pennsylvania-presidential-election", "No"), # # ("will-a-democrat-win-wisconsin-presidential-election", "No"), # ], # "method": "balanced" # # }, # # { # "trade_name": "REP flip Biden State", # "subtitle": "test", # "side_a_trades": [ # ("republicans-flip-a-2020-biden-state", "No"), # ], # "side_b_trades": [ # ("will-a-democrat-win-georgia-presidential-election", "No"), # ], # "method": "balanced" # # }, # { # "trade_name": "DEM flip Trump State", # "subtitle": "test", # "side_a_trades": [ # ("dems-flip-a-2020-trump-state", "No"), # ], # "side_b_trades": [ # ("will-a-democrat-win-north-carolina-presidential-election", "Yes"), # ], # "method": "balanced" # }, ]

get_market_book_and_live_arb.py

This program will take your strategies and generate a nice HTML file that will constantly update the arbitrage opportunity. The output looks like this.

    import os
    import sys
    import json
    import pandas as pd
    import logging
    import time
    from datetime import datetime
    import pytz
    from py_clob_client.client import ClobClient
    from strategies import trades
    from get_order_book import update_books_for_trades  # Import the function
    from dotenv import load_dotenv
    import numpy as np
    from get_live_price import get_live_price  # Import the new live price function
    import jinja2
    import tempfile
    import subprocess

    # Load environment variables from .env before reading them
    load_dotenv()

    # Access the environment variables
    api_key = os.getenv('API_KEY')

    # Set up logging configuration
    logging.basicConfig(level=logging.INFO, format='%(asctime)s - %(levelname)s - %(message)s')

    # Replace with your actual host and chain ID
    host = "https://clob.polymarket.com"
    chain_id = 137  # Polygon Mainnet

    # Initialize the ClobClient
    client = ClobClient(host, key=api_key, chain_id=chain_id)

    # Dictionary to cache live prices
    live_price_cache = {}


    def load_market_lookup():
        with open('./data/market_lookup.json', 'r') as f:
            market_lookup = json.load(f)

        slug_to_token_id = {}
        for market in market_lookup.values():
            slug = market['market_slug']
            slug_to_token_id[slug] = {token['outcome']: token['token_id'] for token in market['tokens']}
        return slug_to_token_id


    def get_actual_price(slug, outcome, user_id='JeremyRWhittaker'):
        """
        Get the actual price and size from the user's latest trade for the specified slug and outcome.
        """
        file_path = f'./data/user_trades/{user_id}_enriched_transactions.parquet'
        if not os.path.exists(file_path):
            logging.warning(f"User trades file not found: {file_path}")
            return None, None

        try:
            df = pd.read_parquet(file_path)
        except Exception as e:
            logging.error(f"Failed to read user trades file: {file_path}. Error: {e}")
            return None, None

        # Parse 'timeStamp_erc1155' as datetime
        try:
            df['timeStamp_erc1155'] = pd.to_datetime(df['timeStamp_erc1155'])
        except Exception as e:
            logging.error(f"Failed to parse 'timeStamp_erc1155' as datetime: {e}")
            return None, None

        # Filter by market_slug and outcome
        df_filtered = df[(df['market_slug'] == slug) & (df['outcome'] == outcome)]
        if df_filtered.empty:
            logging.info(f"No trades found for user {user_id} in market {slug} ({outcome})")
            return None, None

        # Get the row with the latest 'timeStamp_erc1155'
        latest_trade = df_filtered.loc[df_filtered['timeStamp_erc1155'].idxmax()]
        price = latest_trade['price_paid_per_token']
        size = latest_trade['shares']
        return price, size


    def get_price_and_size(df, price_type):
        if price_type == 'ask':
            relevant_df = df[df['side'] == 'ask']
            if not relevant_df.empty:
                min_price_row = relevant_df.loc[relevant_df['price'].idxmin()]
                return min_price_row['price'], min_price_row['size']
        elif price_type == 'bid':
            relevant_df = df[df['side'] == 'bid']
            if not relevant_df.empty:
                max_price_row = relevant_df.loc[relevant_df['price'].idxmax()]
                return max_price_row['price'], max_price_row['size']
        elif price_type == 'mid':
            ask_df = df[df['side'] == 'ask']
            bid_df = df[df['side'] == 'bid']
            if not ask_df.empty and not bid_df.empty:
                min_ask_price = ask_df['price'].min()
                max_bid_price = bid_df['price'].max()
                return (min_ask_price + max_bid_price) / 2, None
        return None, None


    def get_live_price(token_id, side):
        cache_key = f"{token_id}_{side.upper()}"
        current_time = time.time()  # Get the current time in seconds since the Epoch

        # Check if the price is in the cache and if it's still valid (not older than 2 minutes)
        if cache_key in live_price_cache:
            cached_price, timestamp = live_price_cache[cache_key]
            if current_time - timestamp < [...]

    # [... listing truncated here; it resumes inside the arbitrage calculation ...]

                [...] > 0:
                    profit = 1 - total_cost
                    arb_pct = profit * 100
                    logging.info(f"Total cost: {total_cost:.4f}, profit: {profit:.4f}, arb: {arb_pct:.4f}%")
                    arbitrage_per_price_type[price_type] = arb_pct
                else:
                    logging.warning(f"Data incomplete for trade: {trade_name}, setting arbitrage to NaN for {price_type}.")
                    arbitrage_per_price_type[price_type] = np.nan

            if arbitrage_per_price_type:
                arbitrage_info[trade_name] = arbitrage_per_price_type
                logging.info(f"\nArbitrage opportunity for {trade_name}: {arbitrage_info[trade_name]}")
            else:
                logging.info(f"No arbitrage opportunities found for {trade_name}.")

        return arbitrage_info


    def get_spread_from_api(slug, outcome, slug_to_token_id):
        token_id = slug_to_token_id.get(slug, {}).get(outcome)
        if not token_id:
            logging.warning(f"Token ID not found for {slug} ({outcome})")
            return None

        try:
            spread_info = client.get_spread(token_id=token_id)
            if spread_info and 'spread' in spread_info:
                try:
                    spread_value = float(spread_info['spread'])
                    return spread_value
                except ValueError:
                    logging.error(f"Spread value is not a float: {spread_info['spread']}")
                    return None
        except Exception as e:
            logging.error(f"Failed to fetch spread for token {token_id}: {str(e)}")
            return None


    def process_all_trades(trades, output_dir='./strategies', include_bid=True):
        """
        Process all trades, saving the results to CSV and HTML.
        """
        if not os.path.exists(output_dir):
            os.makedirs(output_dir)

        # Default user ID
        user_id = 'JeremyRWhittaker'

        # Run get_user_trade_prices.py as a subprocess to refresh the user's trades
        try:
            logging.info(f"Updating user trades for user: {user_id}")
            subprocess.run(
                ['python', 'get_user_trade_prices.py', user_id, './data/strategies.py'],
                check=True
            )
            logging.info(f"Successfully updated user trades for user: {user_id}")
        except subprocess.CalledProcessError as e:
            logging.error(f"Error updating user trades: {e}")
            return  # Exit the function if updating trades is critical

        slug_to_token_id = load_market_lookup()
        arbitrage_info = {}
        datasets = {}
        spread_info = {}
        trade_descriptions = {}

        # Determine the price types based on the include_bid flag
        price_types = ['ask', 'mid', 'live', 'actual']
        if include_bid:
            price_types.append('bid')

        for trade in trades:
            trade_name = trade['trade_name']
            trade_descriptions[trade_name] = trade.get('description', '')  # Store the description
            trade_datasets = {}
            trade_spreads = {}

            if trade['method'] == 'all_no':
                for price_type in price_types:
                    save_trade_details_with_prices(trade, ['positions'], price_type, output_dir,
                                                   slug_to_token_id, user_id)
                    # Load and store dataset
                    dataset_path = os.path.join(output_dir, f"{trade_name}_{price_type}.csv")
                    if os.path.exists(dataset_path):
                        trade_datasets[price_type] = pd.read_csv(dataset_path)
            elif trade['method'] == 'balanced':
                for price_type in price_types:
                    save_trade_details_with_prices(trade, ['side_a_trades', 'side_b_trades'], price_type,
                                                   output_dir, slug_to_token_id, user_id)
                    # Load and store dataset
                    dataset_path = os.path.join(output_dir, f"{trade_name}_{price_type}.csv")
                    if os.path.exists(dataset_path):
                        trade_datasets[price_type] = pd.read_csv(dataset_path)

            # Get the spread for the trade using the token ID
            for side in ['positions', 'side_a_trades', 'side_b_trades']:
                trade_sides = trade.get(side, [])
                for slug, outcome in trade_sides:
                    try:
                        logging.info(f"Processing trade side {side} for slug: {slug} and outcome: {outcome}")
                        token_id = slug_to_token_id.get(slug, {}).get(outcome)
                        if token_id:
                            logging.info(f"Token ID found for {slug} ({outcome}): {token_id}")
                            spread = get_spread_from_api(slug, outcome, slug_to_token_id)
                            if spread is not None:
                                trade_spreads[slug] = spread
                                logging.info(f"Spread for {slug} ({outcome}): {spread}")
                            else:
                                logging.warning(f"No spread found for {slug} ({outcome})")
                        else:
                            logging.warning(f"Token ID not found for {slug} ({outcome})")
                    except Exception as e:
                        logging.error(f"Error processing trade side {side} for {slug} ({outcome}): {e}", exc_info=True)

            # Store the datasets and spreads
            try:
                logging.info(f"Storing datasets and spread information for trade: {trade_name}")
                datasets[trade_name] = trade_datasets
                spread_info[trade_name] = trade_spreads
            except Exception as e:
                logging.error(f"Error storing datasets and spreads for trade: {trade_name}: {e}", exc_info=True)

        # Calculate arbitrage opportunities for all trades at once
        try:
            logging.info("Calculating arbitrage for all trades")
            arbitrage_info = calculate_arbitrage_for_scenarios(trades, price_types=price_types, user_id=user_id)
        except Exception as e:
            logging.error(f"Error calculating arbitrage: {e}", exc_info=True)

        # Save summary and datasets to HTML, including trade descriptions
        try:
            logging.info("Saving summary and datasets to HTML")
            save_summary_to_html_with_datasets(arbitrage_info, datasets, spread_info, output_dir, trade_descriptions)
        except Exception as e:
            logging.error(f"Error saving summary and datasets to HTML: {e}", exc_info=True)

        # Optionally save to CSV as well
        try:
            logging.info("Saving summary to CSV")
            save_summary_to_csv(arbitrage_info, output_dir, datasets)
        except Exception as e:
            logging.error(f"Error saving summary to CSV: {e}", exc_info=True)


    def save_summary_to_csv(arbitrage_info, output_dir, datasets):
        """
        Save a summary of arbitrage opportunities to a CSV file.
        """
        summary_data = []

        # Populate summary data
        for trade_name, arb_data in arbitrage_info.items():
            for price_type, arb_value in arb_data.items():
                summary_data.append({
                    'Trade Name': trade_name,
                    'Price Type': price_type,
                    'Arbitrage %': arb_value
                })

        if summary_data:
            df_summary = pd.DataFrame(summary_data)
            # Sort by Arbitrage %
            df_summary.sort_values(by=['Arbitrage %'], ascending=False, inplace=True)
        else:
            # If no data is found, create an empty DataFrame with appropriate columns
            df_summary = pd.DataFrame(columns=['Trade Name', 'Price Type', 'Arbitrage %'])

        summary_path = os.path.join(output_dir, "summary.csv")
        df_summary.to_csv(summary_path, index=False)
        logging.info("Summary results exported to %s", summary_path)


    def save_summary_to_html_with_datasets(arbitrage_info, datasets, spread_info, output_dir, trade_descriptions):
        """
        Save a summary of arbitrage opportunities to an HTML file using Jinja2 templates,
        with links to the corresponding detailed datasets.
        """
        # Get the current time in Arizona time zone
        arizona_tz = pytz.timezone('America/Phoenix')
        run_time = datetime.now(arizona_tz).strftime('%Y-%m-%d %H:%M:%S %Z')

        # Prepare data to be passed to the template
        trades_summary = []
        trades_list = []

        for trade_name, arb_data in arbitrage_info.items():
            # Get the description
            description = trade_descriptions.get(trade_name, '')

            # Remove the spreads from the trade name
            trade_name_with_spread = trade_name  # Not including spreads

            # Prepare list of price types and arbitrage values for this trade
            price_types_data = []
            ask_arbitrage_num = None  # Initialize to store ask arbitrage value

            for price_type, arb_value in arb_data.items():
                link_id = f"{trade_name.replace(' ', '_').replace('/', '-')}_{price_type}"
                try:
                    arb_num = float(arb_value)
                    if np.isnan(arb_num):
                        arb_num = float('-inf')
                        arb_str = 'NaN'
                    else:
                        arb_str = f"{arb_num:.2f}"
                except (TypeError, ValueError):
                    arb_num = float('-inf')
                    arb_str = 'NaN'

                price_types_data.append({
                    'price_type': price_type,
                    'arbitrage': arb_str,
                    'arbitrage_num': arb_num,
                    'link_id': link_id
                })

                # Store ask arbitrage value
                if price_type == 'ask':
                    ask_arbitrage_num = arb_num

                # Get the dataset HTML
                dataset = datasets.get(trade_name, {}).get(price_type)
                if dataset is not None:
                    dataset_html = dataset.to_html(index=False)
                else:
                    dataset_html = "<p>No data available for this trade and price type.</p>"

                trades_list.append({
                    'trade_name': trade_name_with_spread,
                    'price_type': price_type,
                    'link_id': link_id,
                    'dataset_html': dataset_html
                })

            # Sort price_types_data, ensure 'ask' is first
            price_types_data.sort(key=lambda x: 0 if x['price_type'] == 'ask' else 1)

            # Add ask_arbitrage_num to trade data
            trades_summary.append({
                'trade_name': trade_name_with_spread,
                'description': description,
                'price_types': price_types_data,
                'ask_arbitrage_num': ask_arbitrage_num
            })

        # Now sort trades_summary by 'ask_arbitrage_num' descending, handling NaN values
        def sort_key(x):
            ask_arb = x.get('ask_arbitrage_num')
            if ask_arb is None or np.isnan(ask_arb):
                return float('-inf')  # Treat NaN and None as the lowest value
            else:
                return ask_arb

        trades_summary.sort(key=sort_key, reverse=True)

        # Prepare context for the template
        context = {
            'run_time': run_time,
            'trades_summary': trades_summary,
            'trades': trades_list
        }

        # Load the Jinja2 template with CSS styling
        html_template = """
        <!DOCTYPE html>
        <html>
        <head>
            <title>Arbitrage Summary</title>
            <style>
                body { font-family: Arial, sans-serif; margin: 20px; background-color: #f9f9f9; }
                h1, h2, h3 { color: #333; }
                table { width: 100%; border-collapse: collapse; margin-bottom: 20px; background-color: #fff; }
                th, td { padding: 12px; border: 1px solid #ddd; text-align: left; }
                th { background-color: #f4f4f4; }
                .arb-positive { background-color: #d4edda !important; /* Light green */ }
                a { color: #3498db; text-decoration: none; }
                a:hover { text-decoration: underline; }
                .timestamp { font-size: 0.9em; color: #777; }
                .trade-section { margin-bottom: 30px; padding-bottom: 10px; border-bottom: 1px solid #ccc; }
                .trade-description { font-style: italic; margin-bottom: 10px; }
            </style>
        </head>
        <body>
            <h1>Arbitrage Summary</h1>
            <p class="timestamp">Updated at: {{ run_time }}</p>

            {% for trade in trades_summary %}
            <div class="trade-section">
                <h2>{{ trade.trade_name }}</h2>
                {% if trade.description %}
                <p class="trade-description">{{ trade.description }}</p>
                {% endif %}
                <table>
                    <tr><th>Price Type</th><th>Arbitrage %</th></tr>
                    {% for item in trade.price_types %}
                    <tr class="{% if item.arbitrage_num > 0 %}arb-positive{% endif %}">
                        <td><a href="#{{ item.link_id }}">{{ item.price_type }}</a></td>
                        <td>{{ item.arbitrage }}</td>
                    </tr>
                    {% endfor %}
                </table>
            </div>
            {% endfor %}

            {% for trade in trades %}
            <h3 id="{{ trade.link_id }}">{{ trade.trade_name }} ({{ trade.price_type }})</h3>
            {{ trade.dataset_html | safe }}
            {% endfor %}

            <script>
                // Auto-reload the page every 30 seconds to reflect new updates
                setInterval(function() {
                    window.location.reload(true); // Force reload without using the cache
                }, 30000); // Reload every 30 seconds
            </script>
        </body>
        </html>
        """

        # Create a Jinja2 environment and render the template
        template = jinja2.Template(html_template)
        rendered_html = template.render(context)

        # Write to a temporary file and then replace the original file atomically
        summary_html_path = os.path.join(output_dir, "summary.html")
        try:
            # Write to a temporary file
            with tempfile.NamedTemporaryFile('w', delete=False, dir=output_dir,
                                             prefix='summary_', suffix='.html') as tmp_file:
                tmp_file.write(rendered_html)
                temp_file_path = tmp_file.name

            # Atomically replace the old file with the new file
            os.replace(temp_file_path, summary_html_path)
            logging.info("Summary with datasets exported to %s", summary_html_path)
        except Exception as e:
            logging.error(f"Error saving summary HTML file: {e}", exc_info=True)


    def run_continuously(trades, output_dir='./strategies', include_bid=True, interval=300):
        """
        Run the process_all_trades function every 'interval' seconds.
        """
        while True:
            try:
                # First, update the order books
                logging.info("Updating order books before processing trades.")
                update_books_for_trades()

                # Run the main processing function
                process_all_trades(trades, output_dir=output_dir, include_bid=include_bid)

                # Log the completion of one iteration
                logging.info("Completed one iteration of process_all_trades.")

                # Sleep for the specified interval (300 seconds = 5 minutes)
                time.sleep(interval)
            except Exception as e:
                logging.error(f"An error occurred: {e}")
                # Sleep for a bit before trying again in case of error
                time.sleep(interval)


    # Example usage:
    if __name__ == "__main__":
        run_continuously(trades, include_bid=True)

get_polygon_data.py

With this code, you can enter any Polymarket username or wallet ID, and it will generate charts and graphs of that user’s trade history.
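As a rough illustration of the aggregation behind a chart like Shares by Market, the sketch below totals shares and computes the share-weighted average price per market. It assumes trade records carrying the `market_slug`, `shares`, and `price_paid_per_token` fields that appear in the user trades file read by the previous script. The function name and the sample rows are hypothetical, and the actual script may compute this differently.

```python
from collections import defaultdict


def shares_by_market(trades):
    """Total shares and share-weighted average price for each market slug."""
    totals = defaultdict(lambda: {"shares": 0.0, "cost": 0.0})
    for trade in trades:
        entry = totals[trade["market_slug"]]
        entry["shares"] += trade["shares"]
        entry["cost"] += trade["shares"] * trade["price_paid_per_token"]

    return {
        slug: {
            "shares": entry["shares"],
            # Average price is undefined when no shares were bought.
            "avg_price": entry["cost"] / entry["shares"] if entry["shares"] else float("nan"),
        }
        for slug, entry in totals.items()
    }


# Hypothetical sample rows, for illustration only.
sample_trades = [
    {"market_slug": "will-a-democrat-win-michigan-presidential-election",
     "shares": 100, "price_paid_per_token": 0.60},
    {"market_slug": "will-a-democrat-win-michigan-presidential-election",
     "shares": 300, "price_paid_per_token": 0.50},
    {"market_slug": "trump-wins-every-swing-state",
     "shares": 50, "price_paid_per_token": 0.20},
]

summary = shares_by_market(sample_trades)
for slug, row in summary.items():
    print(f"{slug}: {row['shares']:.0f} shares at an average of {row['avg_price']:.3f}")
```

The per-market totals map onto bar heights, and the average price is the kind of value that could be annotated on each bar.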